Welcome to the Yocto Project Documentation

Yocto Project Quick Build

Welcome!

This short document steps you through the process for a typical image build using the Yocto Project. The document also introduces how to configure a build for specific hardware. You will use the Yocto Project to build a reference embedded OS called Poky.

Note

The examples in this paper assume you are using a native Linux system running a recent Ubuntu Linux distribution. If the machine you want to use to build an image with the Yocto Project (the Build Host) is not a native Linux system, you can still perform these steps by using CROss PlatformS (CROPS) and setting up a Poky container. See the Setting Up to Use CROss PlatformS (CROPS) section in the Yocto Project Development Tasks Manual for more information.

You may use version 2 of Windows Subsystem For Linux (WSL 2) to set up a build host using Windows 10 or later, or Windows Server 2019 or later. See the Setting Up to Use Windows Subsystem For Linux (WSL 2) section in the Yocto Project Development Tasks Manual for more information.

If you want more conceptual or background information on the Yocto Project, see the Yocto Project Overview and Concepts Manual.

Compatible Linux Distribution

Make sure your Build Host meets the following requirements:

At least 90 Gbytes of free disk space, though much more will help to run multiple builds and increase performance by reusing build artifacts.

At least 8 Gbytes of RAM, though a modern build host with as much RAM and as many CPU cores as possible is strongly recommended to maximize build performance.

Runs a supported Linux distribution (i.e. recent releases of Fedora, openSUSE, CentOS, Debian, or Ubuntu). For a list of Linux distributions that support the Yocto Project, see the Supported Linux Distributions section in the Yocto Project Reference Manual. For detailed information on preparing your build host, see the Preparing the Build Host section in the Yocto Project Development Tasks Manual.

Git 1.8.3.1 or greater

tar 1.28 or greater

Python 3.8.0 or greater

gcc 8.0 or greater

GNU make 4.0 or greater

If your build host does not meet any of these listed version requirements, you can take steps to prepare the system so that you can still use the Yocto Project. See the Required Git, tar, Python, make and gcc Versions section in the Yocto Project Reference Manual for information.
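The version requirements above can be checked with a short script before you install anything else. This is a minimal sketch, assuming GNU coreutils (for `sort -V`) and that each tool prints its version number in its `--version` output; the helper names `version_ge`, `tool_version`, and `check_tool` are hypothetical and not part of Poky.

```shell
# Minimal sketch: compare installed tool versions with the minimums above.
# Assumes GNU coreutils (sort -V). Helper names are hypothetical.

# Succeed if version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Print the first dotted version number found in "<tool> --version" output
# (prints nothing if the tool is not installed).
tool_version() {
    "$1" --version 2>/dev/null | grep -oE '[0-9]+(\.[0-9]+)+' | head -n 1
}

# Report whether tool $1 (found version $3) meets minimum version $2.
check_tool() {
    if [ -z "$3" ]; then
        echo "$1: MISSING (need >= $2)"
    elif version_ge "$3" "$2"; then
        echo "$1 $3: OK"
    else
        echo "$1 $3: TOO OLD (need >= $2)"
    fi
}

check_tool git     1.8.3.1 "$(tool_version git)"
check_tool tar     1.28    "$(tool_version tar)"
check_tool python3 3.8.0   "$(tool_version python3)"
check_tool gcc     8.0     "$(tool_version gcc)"
check_tool make    4.0     "$(tool_version make)"
```

Any line reporting MISSING or TOO OLD points you to the Reference Manual section mentioned above.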

Build Host Packages

You must install essential host packages on your build host. The following command installs the host packages based on an Ubuntu distribution:

$ sudo apt install gawk wget git diffstat unzip texinfo gcc build-essential chrpath socat cpio python3 python3-pip python3-pexpect xz-utils debianutils iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev python3-subunit mesa-common-dev zstd liblz4-tool file locales libacl1

$ sudo locale-gen en_US.UTF-8

Note

For host package requirements on all supported Linux distributions, see the Required Packages for the Build Host section in the Yocto Project Reference Manual.
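Before building, you may want to confirm that the en_US.UTF-8 locale generated above is actually available. A minimal sketch, assuming `locale -a` lists locales in the usual glibc form (for example en_US.utf8); the function name `has_locale` is hypothetical.

```shell
# Succeed if the locale list read from stdin contains the UTF-8 variant of
# locale $1 (e.g. "en_US"). glibc may report it as en_US.utf8 or en_US.UTF-8.
has_locale() {
    grep -qiE "^$1\.utf-?8\$"
}

if locale -a 2>/dev/null | has_locale en_US; then
    echo "en_US.UTF-8 locale is available"
else
    echo "en_US.UTF-8 locale not found; run: sudo locale-gen en_US.UTF-8"
fi
```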

Use Git to Clone Poky

Once you complete the setup instructions for your machine, you need to get a copy of the Poky repository on your build host. Use the following commands to clone the Poky repository.

$ git clone git://git.yoctoproject.org/poky
Cloning into 'poky'...
remote: Counting objects: 432160, done.
remote: Compressing objects: 100% (102056/102056), done.
remote: Total 432160 (delta 323116), reused 432037 (delta 323000)
Receiving objects: 100% (432160/432160), 153.81 MiB | 8.54 MiB/s, done.
Resolving deltas: 100% (323116/323116), done.
Checking connectivity... done.

Go to the Releases wiki page, and choose a release codename (such as scarthgap), corresponding to either the latest stable release or a Long Term Support release.

Then move to the poky directory and take a look at existing branches:

$ cd poky
$ git branch -a
.
.
.
remotes/origin/HEAD -> origin/master
remotes/origin/dunfell
remotes/origin/dunfell-next
.
.
.
remotes/origin/gatesgarth
remotes/origin/gatesgarth-next
.
.
.
remotes/origin/master
remotes/origin/master-next
.
.
.

For this example, check out the scarthgap branch based on the Scarthgap release:

$ git checkout -t origin/scarthgap -b my-scarthgap
Branch 'my-scarthgap' set up to track remote branch 'scarthgap' from 'origin'.
Switched to a new branch 'my-scarthgap'

The previous Git checkout command creates a local branch named my-scarthgap. The files available to you in that branch exactly match the repository’s files in the scarthgap release branch.

Note that you can regularly type the following command in the same directory to keep your local files in sync with the release branch:

$ git pull

For more options and information about accessing Yocto Project related repositories, see the Locating Yocto Project Source Files section in the Yocto Project Development Tasks Manual.
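The checkout step above can be wrapped in a small shell function that refuses to create a branch for a codename the remote does not have. This is a minimal sketch meant to be run from inside the cloned poky directory; the function name `checkout_release` is hypothetical, and the my-<codename> branch name simply follows the scarthgap example above.

```shell
# Create and switch to a local branch "my-<codename>" tracking
# origin/<codename>, as in the scarthgap example above.
# Run from inside the cloned poky directory.
checkout_release() {
    codename=$1
    # Fail early if the remote release branch does not exist.
    if ! git rev-parse --verify --quiet "origin/$codename" >/dev/null; then
        echo "no remote branch origin/$codename; check 'git branch -a'" >&2
        return 1
    fi
    git checkout -t "origin/$codename" -b "my-$codename"
}

# Usage (from inside poky):
#   checkout_release scarthgap
#   git pull        # later, to stay in sync with the release branch
```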

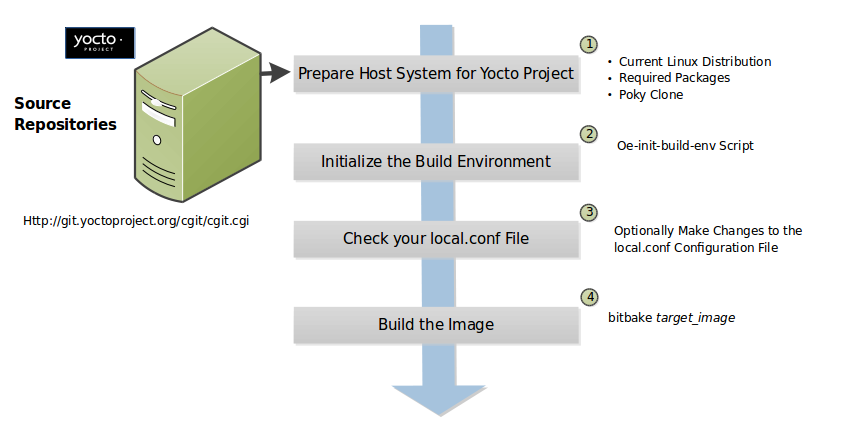

Building Your Image

Use the following steps to build your image. The build process creates an entire Linux distribution, including the toolchain, from source.

Note

If you are working behind a firewall and your build host is not set up for proxies, you could encounter problems with the build process when fetching source code (e.g. fetcher failures or Git failures).

If you do not know your proxy settings, consult your local network infrastructure resources and get that information. A good starting point could also be to check your web browser settings. Finally, you can find more information on the “Working Behind a Network Proxy” page of the Yocto Project Wiki.

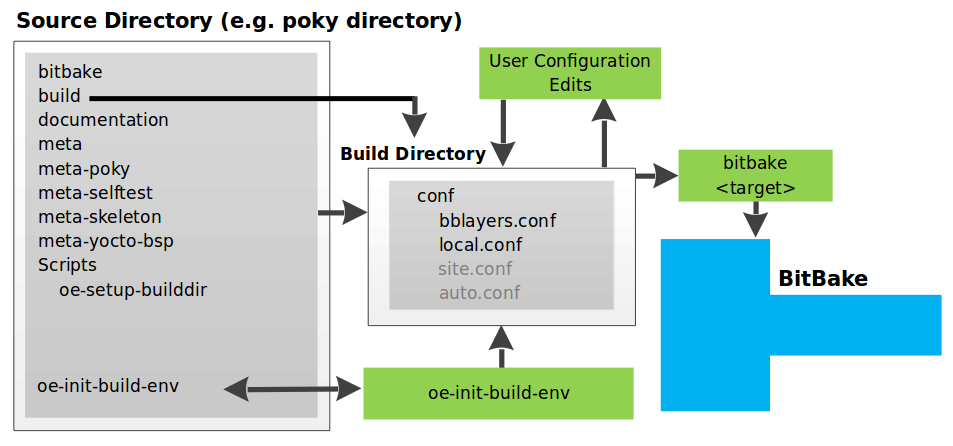

Initialize the Build Environment: From within the poky directory, run the oe-init-build-env environment setup script to define Yocto Project’s build environment on your build host.

$ cd poky
$ source oe-init-build-env
You had no conf/local.conf file. This configuration file has therefore been created for you with some default values. You may wish to edit it to, for example, select a different MACHINE (target hardware). See conf/local.conf for more information as common configuration options are commented.

You had no conf/bblayers.conf file. This configuration file has therefore been created for you with some default values. To add additional metadata layers into your configuration please add entries to conf/bblayers.conf.

The Yocto Project has extensive documentation about OE including a reference manual which can be found at:
    https://docs.yoctoproject.org

For more information about OpenEmbedded see their website:
    https://www.openembedded.org/

### Shell environment set up for builds. ###

You can now run 'bitbake <target>'

Common targets are:
    core-image-minimal
    core-image-full-cmdline
    core-image-sato
    core-image-weston
    meta-toolchain
    meta-ide-support

You can also run generated QEMU images with a command like 'runqemu qemux86-64'

Other commonly useful commands are:
 - 'devtool' and 'recipetool' handle common recipe tasks
 - 'bitbake-layers' handles common layer tasks
 - 'oe-pkgdata-util' handles common target package tasks
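Scripts that call bitbake need to run in a shell where oe-init-build-env has already been sourced. A minimal sketch of a guard, assuming the setup script exports BUILDDIR (as current Poky releases do) and puts bitbake on PATH; the function name `require_build_env` is hypothetical.

```shell
# Return non-zero unless the Yocto build environment appears to be set up:
# BUILDDIR must point to an existing directory and bitbake must be on PATH.
require_build_env() {
    if [ -z "${BUILDDIR:-}" ] || [ ! -d "$BUILDDIR" ]; then
        echo "BUILDDIR is not set; run 'source oe-init-build-env' first" >&2
        return 1
    fi
    if ! command -v bitbake >/dev/null 2>&1; then
        echo "bitbake not found on PATH" >&2
        return 1
    fi
    echo "Build Directory: $BUILDDIR"
}
```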

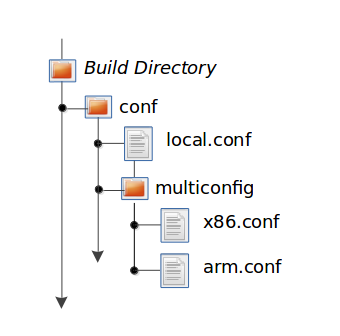

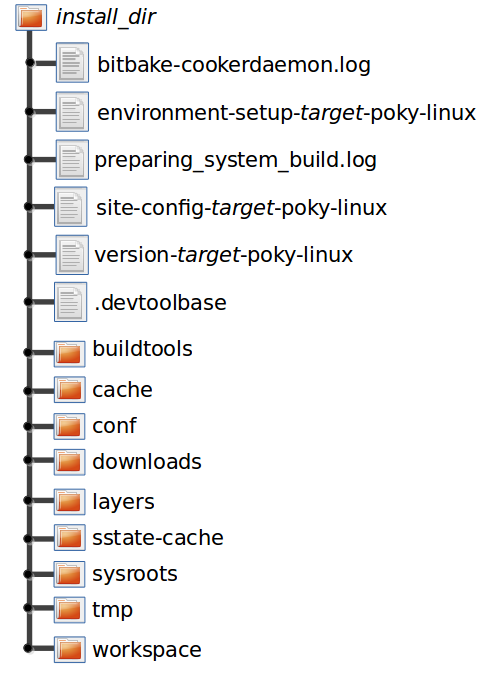

Among other things, the script creates the Build Directory, which is build in this case and is located in the Source Directory. After the script runs, your current working directory is set to the Build Directory. Later, when the build completes, the Build Directory contains all the files created during the build.

Examine Your Local Configuration File: When you set up the build environment, a local configuration file named local.conf becomes available in a conf subdirectory of the Build Directory. For this example, the defaults are set to build for a qemux86-64 target, which is suitable for emulation. The package manager used is set to the RPM package manager.

Tip

You can significantly speed up your build and guard against fetcher failures by using Shared State Cache mirrors and enabling Hash Equivalence. This way, you can use pre-built artifacts rather than building them. This is relevant only when your network and the server that you use can download these artifacts faster than you would be able to build them.

To use such mirrors, uncomment the following lines in your conf/local.conf file in the Build Directory:

BB_HASHSERVE_UPSTREAM = "hashserv.yocto.io:8687"
SSTATE_MIRRORS ?= "file://.* http://cdn.jsdelivr.net/yocto/sstate/all/PATH;downloadfilename=PATH"
BB_HASHSERVE = "auto"
BB_SIGNATURE_HANDLER = "OEEquivHash"
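If these lines are not already present in your local.conf, a small helper can append them instead. A minimal sketch, assuming you run it from the Build Directory; the function name `enable_sstate_mirrors` is hypothetical, and the values are copied verbatim from the lines above.

```shell
# Append the shared-state mirror and hash-equivalence settings shown above
# to a local.conf file, unless an uncommented SSTATE_MIRRORS line exists.
enable_sstate_mirrors() {
    conf=${1:-conf/local.conf}
    if grep -q '^SSTATE_MIRRORS' "$conf"; then
        echo "SSTATE_MIRRORS already set in $conf; leaving it unchanged"
        return 0
    fi
    cat >> "$conf" <<'EOF'
BB_HASHSERVE_UPSTREAM = "hashserv.yocto.io:8687"
SSTATE_MIRRORS ?= "file://.* http://cdn.jsdelivr.net/yocto/sstate/all/PATH;downloadfilename=PATH"
BB_HASHSERVE = "auto"
BB_SIGNATURE_HANDLER = "OEEquivHash"
EOF
    echo "Appended sstate mirror settings to $conf"
}
```

Checking for an existing SSTATE_MIRRORS line first keeps the helper safe to run more than once.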

Start the Build: Continue with the following command to build an OS image for the target, which is core-image-sato in this example:

$ bitbake core-image-sato

For information on using the bitbake command, see the BitBake section in the Yocto Project Overview and Concepts Manual, or see The BitBake Command in the BitBake User Manual.

Simulate Your Image Using QEMU: Once this particular image is built, you can start QEMU, which is a Quick EMUlator that ships with the Yocto Project:

$ runqemu qemux86-64

If you want to learn more about running QEMU, see the Using the Quick EMUlator (QEMU) chapter in the Yocto Project Development Tasks Manual.

Exit QEMU: Exit QEMU by either clicking on the shutdown icon or by typing Ctrl-C in the QEMU transcript window from which you invoked QEMU.
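The image files that runqemu boots remain in the Build Directory after you exit QEMU. A minimal sketch for listing what a build deployed, assuming the default layout tmp/deploy/images/<MACHINE> and the qemux86-64 machine used above; the function name `list_images` is hypothetical.

```shell
# List the files a build deployed for a given machine.
# Assumes the default deploy layout: <Build Directory>/tmp/deploy/images/<MACHINE>.
list_images() {
    builddir=$1
    machine=${2:-qemux86-64}
    dir="$builddir/tmp/deploy/images/$machine"
    if [ ! -d "$dir" ]; then
        echo "no deployed images in $dir (has the build finished?)" >&2
        return 1
    fi
    ls -1 "$dir"
}

# Usage, from a shell where oe-init-build-env has been sourced:
#   list_images "$BUILDDIR" qemux86-64
```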

Customizing Your Build for Specific Hardware

So far, you have only built an image suitable for emulation. This section shows you how to customize your build for specific hardware by adding a hardware layer into the Yocto Project development environment.

In general, layers are repositories that contain related sets of instructions and configurations that tell the Yocto Project what to do. Isolating related metadata into functionally specific layers facilitates modular development and makes it easier to reuse the layer metadata.

Note

By convention, layer names start with the string “meta-”.

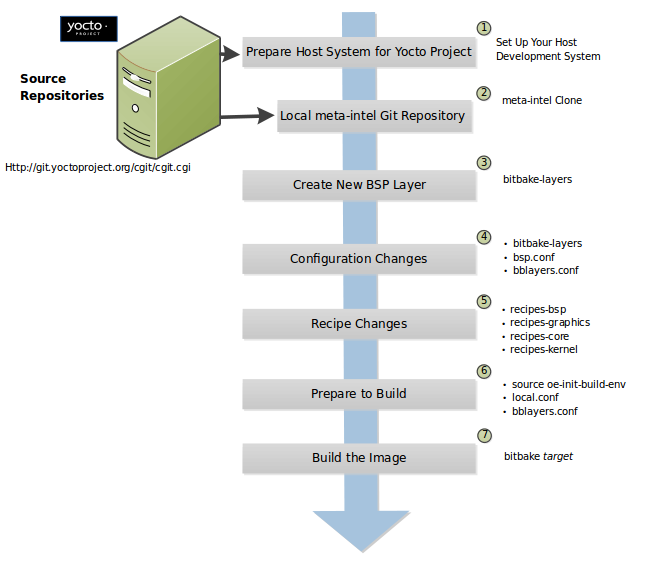

Follow these steps to add a hardware layer:

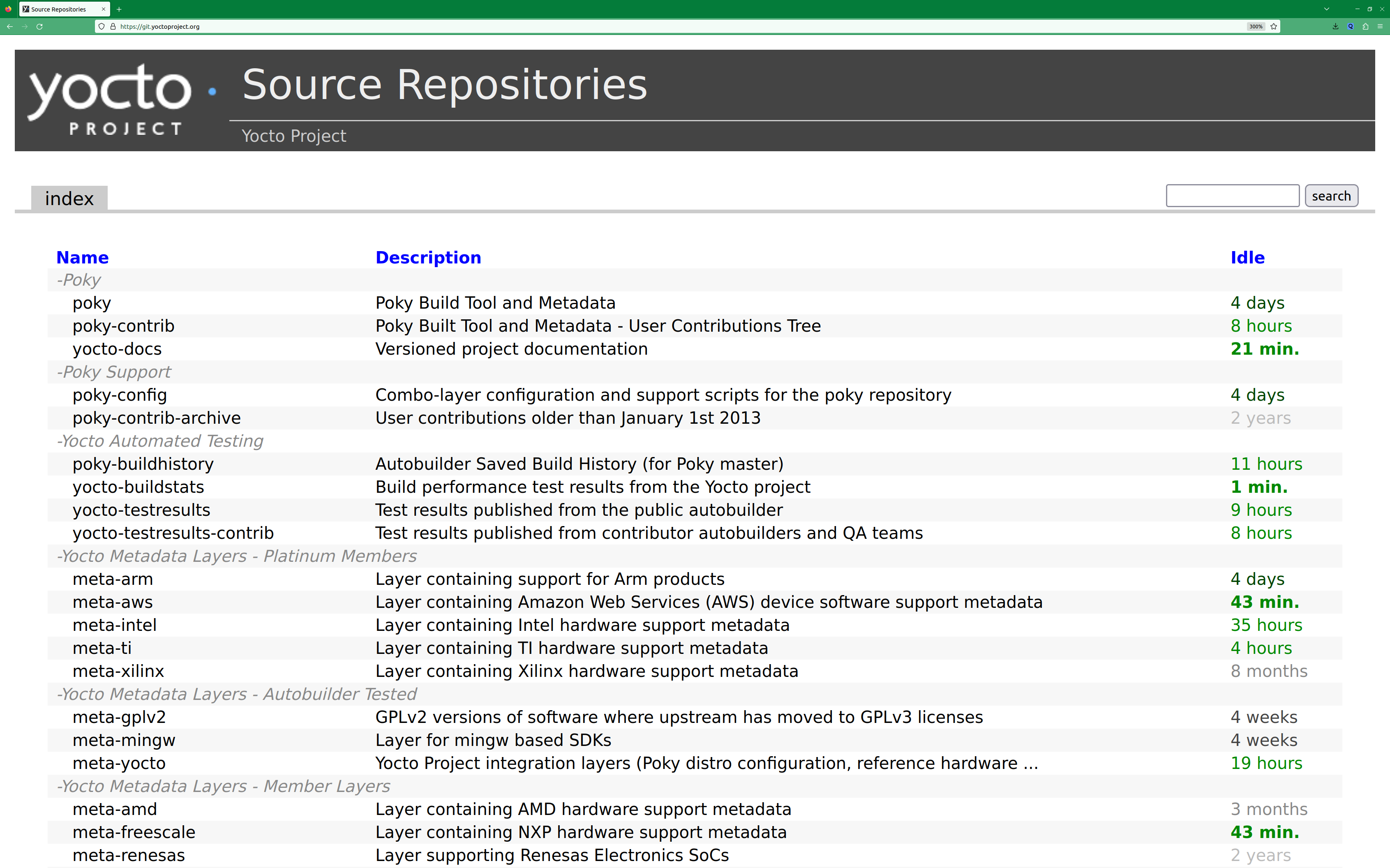

Find a Layer: Many hardware layers are available. The Yocto Project Source Repositories has many hardware layers. This example adds the meta-altera hardware layer.

Clone the Layer: Use Git to make a local copy of the layer on your machine. You can put the copy in the top level of the copy of the Poky repository created earlier:

$ cd poky
$ git clone https://github.com/kraj/meta-altera.git
Cloning into 'meta-altera'...
remote: Counting objects: 25170, done.
remote: Compressing objects: 100% (350/350), done.
remote: Total 25170 (delta 645), reused 719 (delta 538), pack-reused 24219
Receiving objects: 100% (25170/25170), 41.02 MiB | 1.64 MiB/s, done.
Resolving deltas: 100% (13385/13385), done.
Checking connectivity... done.

The hardware layer is now available next to other layers inside the Poky reference repository on your build host as meta-altera and contains all the metadata needed to support hardware from Altera, which is owned by Intel.

Note

It is recommended for layers to have a branch per Yocto Project release. Please make sure to check out the layer branch supporting the Yocto Project release you’re using.
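One way to confirm that a layer branch matches your release is to look at the LAYERSERIES_COMPAT setting in the layer’s conf/layer.conf, which lists the release codenames the layer declares itself compatible with. A minimal sketch; the function name `layer_supports` is hypothetical, and it only performs a simple text match on that file.

```shell
# Succeed if the layer in directory $1 lists release codename $2 in a
# LAYERSERIES_COMPAT_<collection> line of its conf/layer.conf.
layer_supports() {
    layerconf="$1/conf/layer.conf"
    [ -f "$layerconf" ] || { echo "missing $layerconf" >&2; return 1; }
    grep -E '^LAYERSERIES_COMPAT_' "$layerconf" | grep -qw "$2"
}

# Usage, from the poky directory:
#   git -C meta-altera branch -a        # see which release branches exist
#   layer_supports meta-altera scarthgap && echo "compatible"
```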

Change the Configuration to Build for a Specific Machine: The MACHINE variable in the local.conf file specifies the machine for the build. For this example, set the MACHINE variable to cyclone5. These configurations are used: https://github.com/kraj/meta-altera/blob/master/conf/machine/cyclone5.conf.

Note

See the “Examine Your Local Configuration File” step earlier for more information on configuring the build.
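The MACHINE change can also be scripted. A minimal sketch, assuming GNU sed (for `sed -i`); the function name `set_machine` is hypothetical. It deletes any uncommented MACHINE assignment (such as the default weak `??=` one) and appends a plain assignment, so exactly one uncommented MACHINE setting remains.

```shell
# Set MACHINE in a local.conf file (default: conf/local.conf in the
# Build Directory). Uncommented MACHINE assignments are removed first so
# that exactly one remains. Requires GNU sed (-i).
set_machine() {
    machine=$1
    conf=${2:-conf/local.conf}
    sed -i '/^MACHINE[[:space:]]*[?]*=/d' "$conf"
    printf 'MACHINE = "%s"\n' "$machine" >> "$conf"
}

# Usage, from the Build Directory:
#   set_machine cyclone5
```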

Add Your Layer to the Layer Configuration File: Before you can use a layer during a build, you must add it to your bblayers.conf file, which is found in the Build Directory’s conf directory.

Use the bitbake-layers add-layer command to add the layer to the configuration file:

$ cd poky/build
$ bitbake-layers add-layer ../meta-altera
NOTE: Starting bitbake server...
Parsing recipes: 100% |##################################################################| Time: 0:00:32
Parsing of 918 .bb files complete (0 cached, 918 parsed). 1401 targets, 123 skipped, 0 masked, 0 errors.

You can find more information on adding layers in the Adding a Layer Using the bitbake-layers Script section.
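After running bitbake-layers add-layer, `bitbake-layers show-layers` lists the active layers as BitBake sees them. For scripts that only need a quick check without starting the BitBake server, a plain text search of bblayers.conf can be enough. This is a minimal sketch; the function name `layer_enabled` is hypothetical, and it ignores commented lines but does not parse the file the way BitBake does.

```shell
# Succeed if a layer directory named $1 appears in an uncommented line of
# bblayers.conf ($2, default conf/bblayers.conf in the Build Directory).
layer_enabled() {
    name=$1
    conf=${2:-conf/bblayers.conf}
    grep -v '^[[:space:]]*#' "$conf" | grep -qE "/${name}([^[:alnum:]_-]|\$)"
}

# Usage, from the Build Directory:
#   layer_enabled meta-altera || bitbake-layers add-layer ../meta-altera
#   bitbake-layers show-layers     # authoritative list, as BitBake sees it
```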

Completing these steps has added the meta-altera layer to your Yocto Project development environment and configured it to build for the cyclone5 machine.

Note

The previous steps are for demonstration purposes only. If you want to build an image for the cyclone5 machine, read the Altera README first.

Creating Your Own General Layer

Maybe you have an application or specific set of behaviors you need to isolate. You can create your own general layer using the bitbake-layers create-layer command. The tool automates layer creation by setting up a subdirectory with a layer.conf configuration file, a recipes-example subdirectory that contains an example.bb recipe, a licensing file, and a README.

The following commands run the tool to create a layer named meta-mylayer in the poky directory:

$ cd poky
$ bitbake-layers create-layer meta-mylayer
NOTE: Starting bitbake server...
Add your new layer with 'bitbake-layers add-layer meta-mylayer'

For more information on layers and how to create them, see the Creating a General Layer Using the bitbake-layers Script section in the Yocto Project Development Tasks Manual.
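To confirm what create-layer generated, you can check for the pieces this section describes. A minimal sketch; the function name `check_layer_skeleton` is hypothetical, and it only looks for conf/layer.conf, a README, and at least one .bb recipe under recipes-example, without assuming the exact recipe file name, which can vary between releases.

```shell
# Report whether directory $1 contains the pieces a new general layer
# is expected to have, as described above. Returns non-zero if any is missing.
check_layer_skeleton() {
    layer=$1
    status=0
    for f in conf/layer.conf README; do
        if [ -f "$layer/$f" ]; then echo "found   $f"; else echo "missing $f"; status=1; fi
    done
    if find "$layer/recipes-example" -name '*.bb' 2>/dev/null | grep -q .; then
        echo "found   recipes-example/**/*.bb"
    else
        echo "missing recipes-example/**/*.bb"; status=1
    fi
    return $status
}

# Usage, from the poky directory:
#   bitbake-layers create-layer meta-mylayer
#   check_layer_skeleton meta-mylayer
```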

Where To Go Next

Now that you have experienced using the Yocto Project, you might be asking yourself “What now?”. The Yocto Project has many sources of information including the website, wiki pages, and user manuals:

Website: The Yocto Project Website provides background information, the latest builds, breaking news, full development documentation, and access to a rich Yocto Project Development Community into which you can tap.

Video Seminar: The Introduction to the Yocto Project and BitBake, Part 1 and Introduction to the Yocto Project and BitBake, Part 2 videos offer a video seminar introducing you to the most important aspects of developing a custom embedded Linux distribution with the Yocto Project.

Yocto Project Overview and Concepts Manual: The Yocto Project Overview and Concepts Manual is a great place to start to learn about the Yocto Project. This manual introduces you to the Yocto Project and its development environment. The manual also provides conceptual information for various aspects of the Yocto Project.

Yocto Project Wiki: The Yocto Project Wiki provides additional information on where to go next when ramping up with the Yocto Project, release information, project planning, and QA information.

Yocto Project Mailing Lists: Related mailing lists provide a forum for discussion, patch submission and announcements. There are several mailing lists grouped by topic. See the Mailing lists section in the Yocto Project Reference Manual for a complete list of Yocto Project mailing lists.

Comprehensive List of Links and Other Documentation: The Links and Related Documentation section in the Yocto Project Reference Manual provides a comprehensive list of all related links and other user documentation.

Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons Attribution-Share Alike 2.0 UK: England & Wales as published by Creative Commons.

To report any inaccuracies or problems with this (or any other Yocto Project) manual, or to send additions or changes, please send email/patches to the Yocto Project documentation mailing list at docs@lists.yoctoproject.org or log into the Libera Chat #yocto channel.

What I wish I’d known about Yocto Project

Note

Before reading further, make sure you’ve taken a look at the Software Overview page which presents the definitions for many of the terms referenced here. Also, know that some of the information here won’t make sense now, but as you start developing, it is the information you’ll want to keep close at hand. These are best known methods for working with Yocto Project and they are updated regularly.

Using the Yocto Project is fairly easy, until something goes wrong. Without an understanding of how the build process works, you’ll find yourself trying to troubleshoot “a black box”. Here are a few items that new users wished they had known before embarking on their first build with Yocto Project. Feel free to contact us with other suggestions.

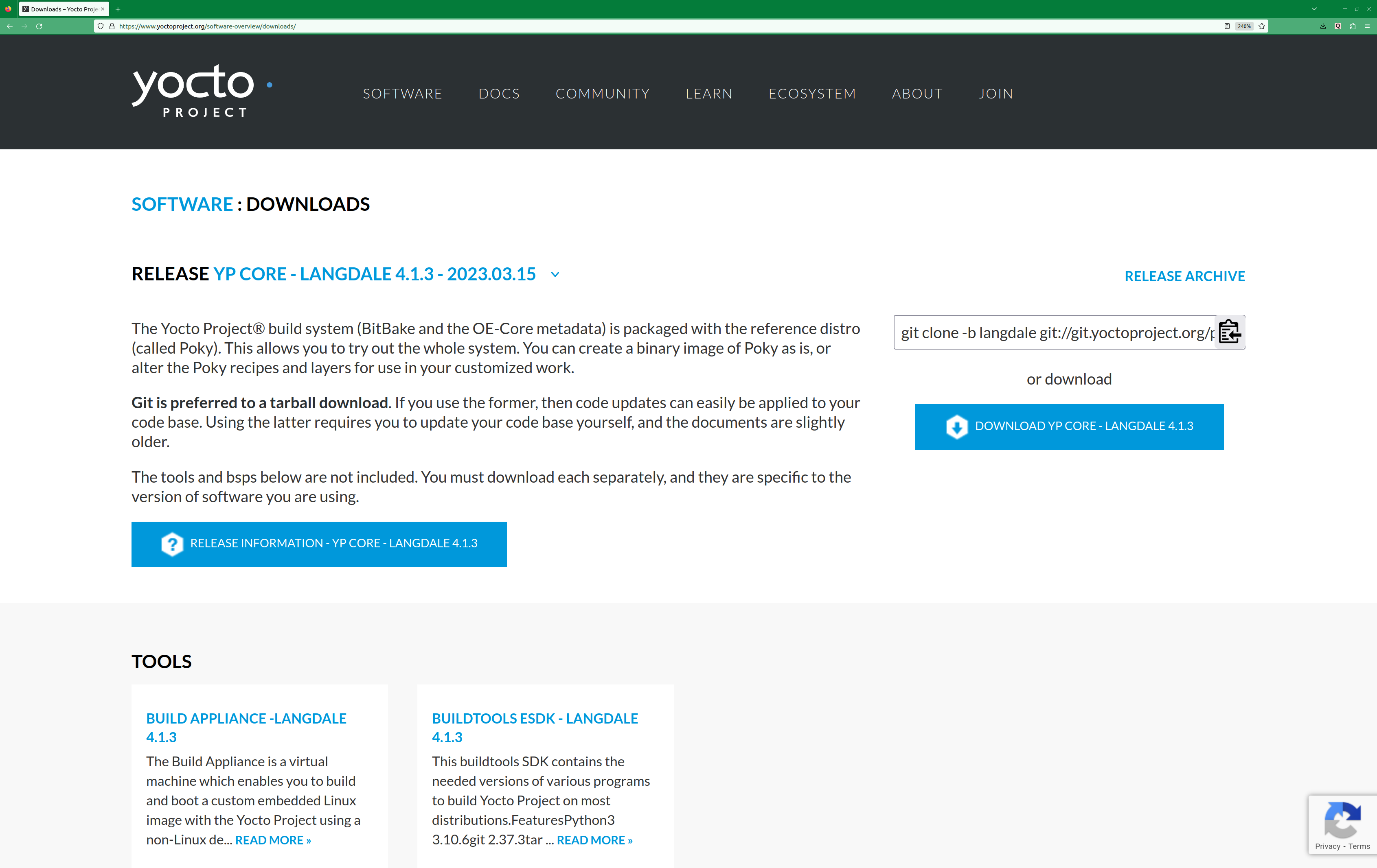

Use Git, not the tarball download: If you use Git, you can pick up bug fixes simply by pulling updates from the release branch (for example with git pull). If you download the tarball instead, you are responsible for your own updates.

Get to know the layer index: All layers can be found in the layer index. Layers that have applied for Yocto Project Compatible status (structure continuity assurance and testing) can be found on the Yocto Project Compatible Layers page. Generally, check the Compatible layer index first, and if you don’t find the necessary layer, check the general layer index. The layer index is an original artifact from the OpenEmbedded Project. As such, that index doesn’t have the curating and testing that the Yocto Project provides on the Yocto Project Compatible layer list, but the latter has fewer entries. Be aware that the layers you find in the layer index do not all have the same level of maturity, validation, or usability, and search results are not prioritized. There is no easy way to help you through the process of choosing the best layer to suit your needs. Consequently, good choices often come from trial and error, checking the mailing lists, or working with other developers through collaboration rooms.

Use existing BSP layers from silicon vendors when possible: Intel, TI, NXP and others have information on what BSP layers to use with their silicon. These layers have names such as “meta-intel” or “meta-ti”. Try not to build layers from scratch. If you do have custom silicon, use one of these layers as a guide or template and familiarize yourself with the Yocto Project Board Support Package Developer’s Guide.

Do not put everything into one layer: Use different layers to logically separate information in your build. As an example, you could have a BSP layer, a GUI layer, a distro configuration, middleware, or an application (e.g. “meta-filesystems”, “meta-python”, “meta-intel”, and so forth). Putting your entire build into one layer limits and complicates future customization and reuse. Isolating information into layers, on the other hand, helps simplify future customizations and reuse.

Never modify the POKY layer. Never. Ever. When you update to the next release, you’ll lose all of your work. ALL OF IT.

Don’t be fooled by documentation searching results: Yocto Project documentation is always being updated. Unfortunately, when you use Google to search for Yocto Project concepts or terms, Google consistently searches and retrieves older versions of Yocto Project manuals. For example, searching for a particular topic using Google could result in a “hit” on a Yocto Project manual that is several releases old. To be sure that you are using the most current Yocto Project documentation, use the drop-down menu at the top of any of its pages.

Many developers look through the All-in-one ‘Mega’ Manual for a concept or term by doing a search through the whole page. This manual is a concatenation of the core set of Yocto Project manuals. Thus, a simple string search using Ctrl-F in this manual produces all the “hits” for a desired term or concept. Once you find the area in which you are interested, you can display the actual manual, if desired. It is also possible to use the search bar in the menu or in the left navigation pane.

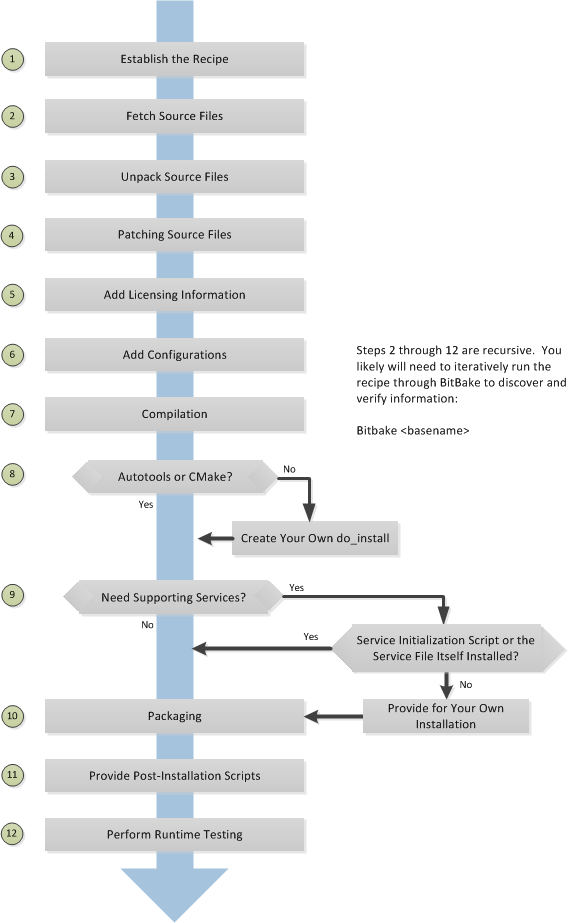

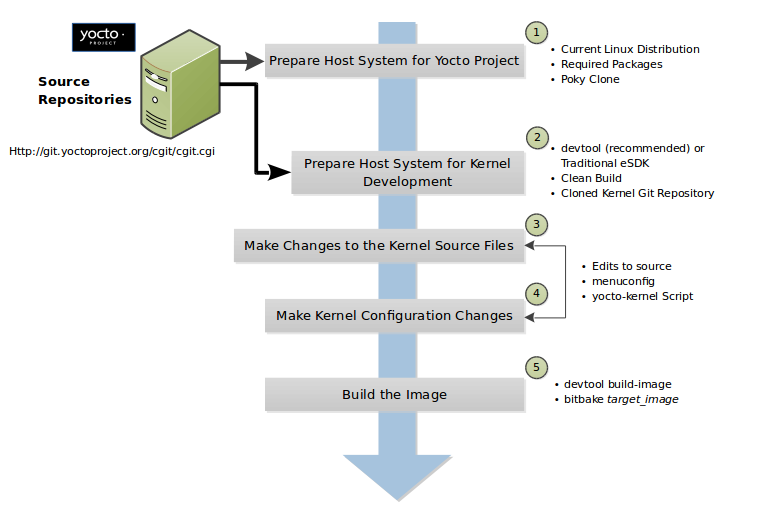

Understand the basic concepts of how the build system works: the workflow: Understanding the Yocto Project workflow is important as it can help you both pinpoint where trouble is occurring and how the build is breaking. The workflow breaks down into the following steps:

Fetch – get the source code

Extract – unpack the sources

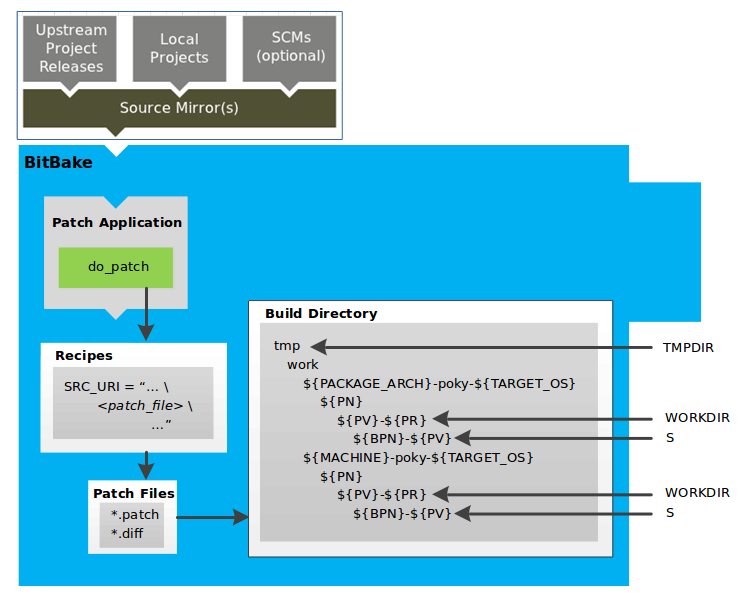

Patch – apply patches for bug fixes and new capability

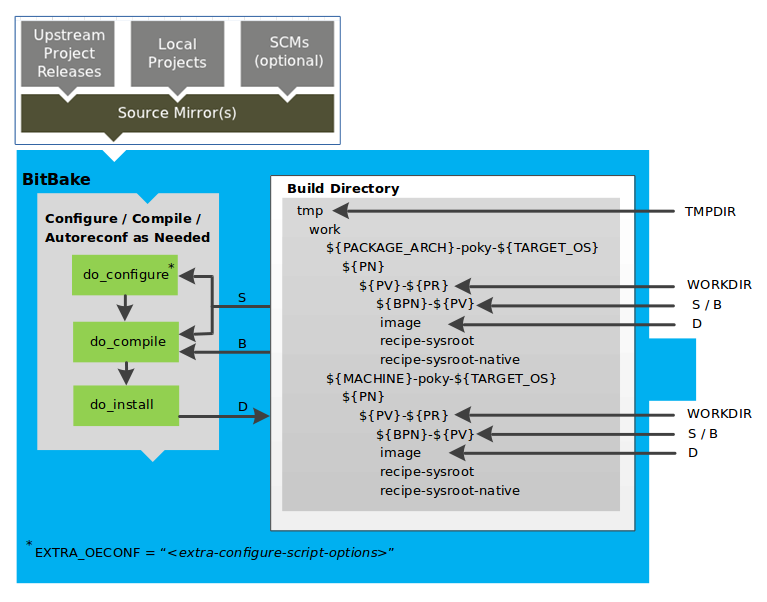

Configure – set up your environment specifications

Build – compile and link

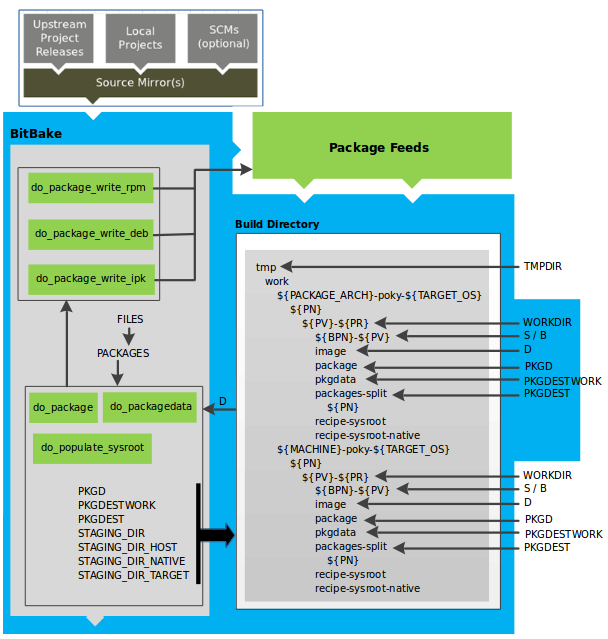

Install – copy files to target directories

Package – bundle files for installation

During “fetch”, there may be an inability to find code. During “extract”, there is likely an invalid zip or something similar. In other words, the function of a particular part of the workflow gives you an idea of what might be going wrong.
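Each workflow step above corresponds to a BitBake task, and each task writes its own log file, so knowing the mapping tells you which log to read first. A minimal sketch, assuming the standard task names do_fetch, do_unpack, do_patch, do_configure, do_compile, do_install, and do_package, with per-task logs named temp/log.<task> in the recipe’s work directory; the function name `task_for_step` is hypothetical.

```shell
# Map a workflow step name (case-insensitive) to the BitBake task that
# performs it.
task_for_step() {
    case $(printf '%s' "$1" | tr '[:upper:]' '[:lower:]') in
        fetch)     echo do_fetch ;;
        extract)   echo do_unpack ;;
        patch)     echo do_patch ;;
        configure) echo do_configure ;;
        build)     echo do_compile ;;
        install)   echo do_install ;;
        package)   echo do_package ;;
        *)         echo "unknown step: $1" >&2; return 1 ;;
    esac
}

# Example: when the "build" step of a recipe fails, start with
#   <recipe work directory>/temp/log.$(task_for_step build)   # log.do_compile
```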

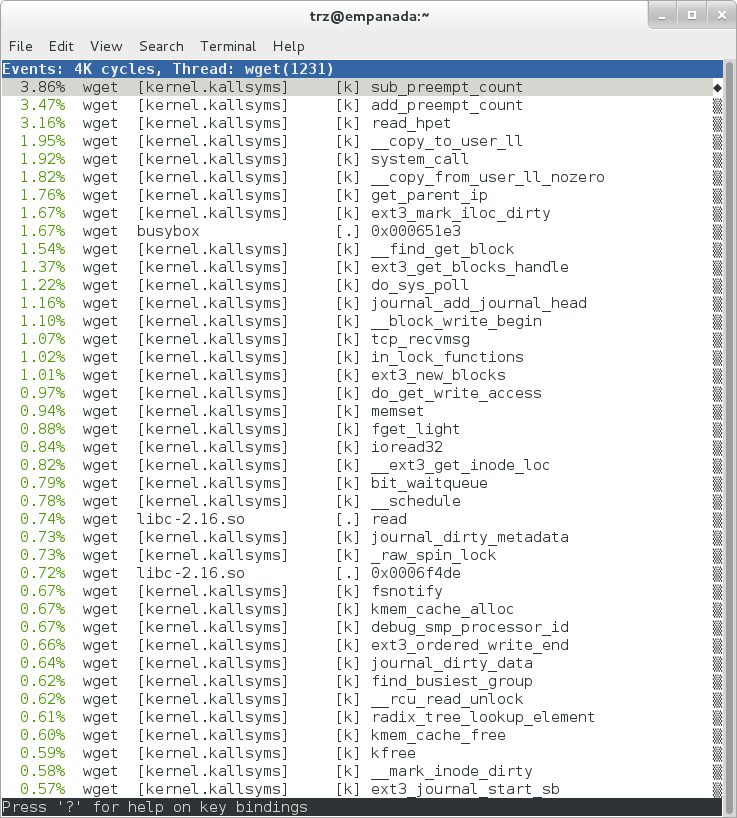

Know that you can generate a dependency graph and learn how to do it: A dependency graph shows dependencies between recipes, tasks, and targets. You can use the “-g” option with BitBake to generate this graph. When you start a build and the build breaks, you might see packages you have never heard of, with no idea why the build system included them. The dependency graph can clarify that confusion. You can learn more about dependency graphs and how to generate them in the Generating Dependency Graphs section in the BitBake User Manual.
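Running `bitbake -g <target>` writes task-depends.dot (and pn-buildlist) to the current directory. To answer “why is this recipe in my build?”, you can search that file for edges pointing at the recipe. A minimal sketch, assuming edges are written one per line in the form "recipe.do_task" -> "dependency.do_task"; the function name `who_depends_on` is hypothetical.

```shell
# Print the recipes whose tasks depend on tasks of recipe $1, according to
# a task-depends.dot file ($2) produced by "bitbake -g <target>".
# The recipe's dependencies on its own tasks are filtered out.
who_depends_on() {
    recipe=$1
    dot=${2:-task-depends.dot}
    grep -F -- "-> \"$recipe.do_" "$dot" \
        | sed -n 's/^[[:space:]]*"\([^"]*\)\.do_[^"]*".*/\1/p' \
        | grep -vxF -- "$recipe" \
        | sort -u
}

# Usage, from the Build Directory:
#   bitbake -g core-image-sato
#   who_depends_on busybox task-depends.dot
```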

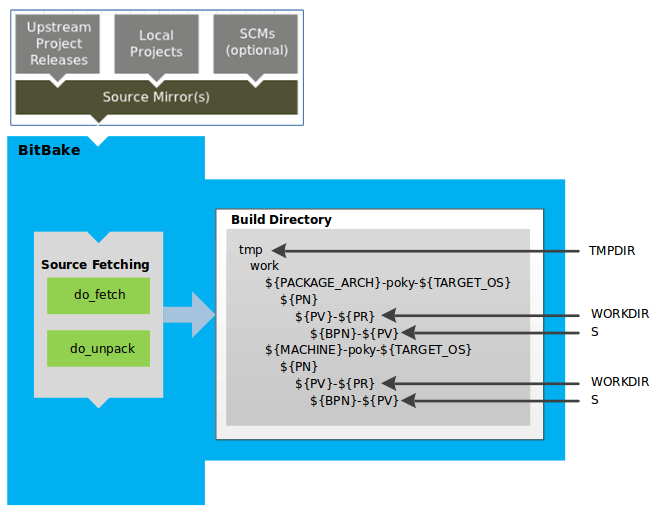

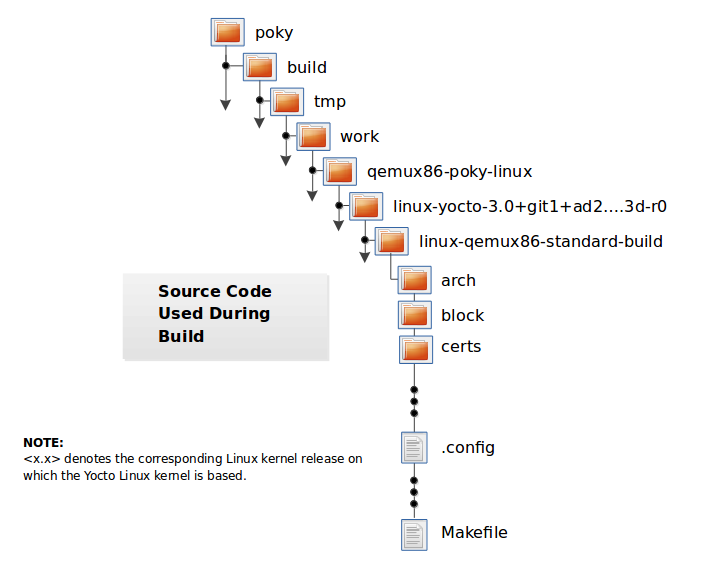

Here’s how you decode “magic” folder names in tmp/work: The build system fetches, unpacks, preprocesses, and builds. If something goes wrong, the build system reports the path to the folder (under build/tmp) that holds the temporary files and packages resulting from the build. For a detailed example of this process, see the example. Unfortunately, this example is based on an earlier release of the Yocto Project.
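The directories under tmp/work follow a fixed pattern: tmp/work/<architecture-vendor-os>/<recipe>/<version>/, with the logs for each task in a temp subdirectory. A minimal sketch that splits such a path into its parts; the function name `decode_work_path` is hypothetical, and on some releases the version component also carries the recipe revision (for example 1.36.1-r0).

```shell
# Split a path inside tmp/work into architecture, recipe, version and the
# remainder, e.g. .../tmp/work/core2-64-poky-linux/busybox/1.36.1/temp/log.do_compile
decode_work_path() {
    rest=${1#*tmp/work/}
    if [ "$rest" = "$1" ]; then
        echo "not a tmp/work path: $1" >&2
        return 1
    fi
    # The last variable (sub) receives everything after the third "/".
    IFS=/ read -r arch recipe version sub <<EOF
$rest
EOF
    echo "architecture: $arch"
    echo "recipe:       $recipe"
    echo "version:      $version"
    echo "inside:       ${sub:-.}"
}
```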

When you perform a build, you can use the “-u” BitBake command-line option to select a user interface (e.g. knotty, ncurses, or taskexp). Used together with the “-g” option, the taskexp interface lets you browse the dependency graph, which helps you understand the build dependencies better.

You can build more than just images: You can build and run a specific task for a specific package (including devshell) or even a single recipe. When developers first start using the Yocto Project, the instructions found in the Yocto Project Quick Build show how to create an image and then run or flash that image. However, you can actually build just a single recipe. Thus, if some dependency or recipe isn’t working, you can just say “bitbake foo” where “foo” is the name for a specific recipe. As you become more advanced using the Yocto Project, and if builds are failing, it can be useful to make sure the fetch itself works as desired. Here are some valuable links: Using a Development Shell for information on how to build and run a specific task using devshell. Also, the SDK manual shows how to build out a specific recipe.
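Running individual tasks is often the quickest way to narrow down a failure: `bitbake -c fetch <recipe>` runs only the fetch step, `bitbake -c listtasks <recipe>` lists a recipe’s tasks, and `bitbake -c devshell <recipe>` opens a development shell. A minimal sketch that checks whether the fetch step works for several recipes, assuming it runs in an initialized build environment; the function name `fetch_only` is hypothetical.

```shell
# Run only the fetch task for each recipe given and report which ones fail.
# Returns non-zero if any fetch failed. Needs an initialized build environment.
fetch_only() {
    failed=""
    for recipe in "$@"; do
        if bitbake -c fetch "$recipe"; then
            echo "fetch OK:     $recipe"
        else
            echo "fetch FAILED: $recipe"
            failed="$failed $recipe"
        fi
    done
    [ -z "$failed" ]
}

# Usage:
#   fetch_only busybox zlib openssl
```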

An ambiguous definition: Package vs Recipe: A recipe contains instructions the build system uses to create packages. In other words, recipes are the input to the build process and packages are its result.

As mentioned, the build system takes the recipe and creates packages from the recipe’s instructions. The resulting packages are related to the one thing the recipe is building but are different parts (packages) of the build (i.e. the main package, the doc package, the debug symbols package, the separate utilities package, and so forth). The build system splits out the packages so that you don’t need to install the packages you don’t want or need, which is advantageous because you are building for small devices when developing for embedded and IoT.

You will want to learn about and know what’s packaged in the root filesystem.
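By default, a recipe’s output is split into a fixed set of packages whose names are derived from the recipe name (PN). In recent releases, the default PACKAGES value is "${PN}-src ${PN}-dbg ${PN}-staticdev ${PN}-dev ${PN}-doc ${PN}-locale ${PACKAGE_BEFORE_PN} ${PN}", and individual recipes can change it. The sketch below only expands that default for a given recipe name, with PACKAGE_BEFORE_PN left empty; the function name `default_packages` is hypothetical. To see what a real recipe produced after a build, use oe-pkgdata-util.

```shell
# Print the default package names for recipe $1, following the default
# PACKAGES pattern quoted above (with PACKAGE_BEFORE_PN left empty).
default_packages() {
    pn=$1
    for suffix in -src -dbg -staticdev -dev -doc -locale ""; do
        echo "$pn$suffix"
    done
}

# After a build, list the packages a recipe actually produced:
#   oe-pkgdata-util list-pkgs -p busybox
```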

Create your own image recipe: There are a number of ways to create your own image recipe. We suggest you create your own image recipe as opposed to appending an existing recipe. Writing an image recipe is straightforward. Again, do not try appending to an existing image recipe. Create your own and do it right from the start.
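A minimal sketch of such an image recipe, written into your own layer by a shell helper. The layer path, the recipe name my-image, the recipes-core/images location, and the package choices are hypothetical. The recipe body follows the pattern of Poky’s core-image-minimal (set IMAGE_INSTALL, then inherit core-image), and the ssh-server-dropbear image feature adds an SSH server, one of the skills listed below.

```shell
# Write a minimal image recipe into layer directory $1 as
# recipes-core/images/$2.bb (both names are hypothetical examples).
write_image_recipe() {
    layer=$1
    name=${2:-my-image}
    mkdir -p "$layer/recipes-core/images"
    cat > "$layer/recipes-core/images/$name.bb" <<'EOF'
SUMMARY = "A small custom image"
LICENSE = "MIT"

# Start from the same base packages as core-image-minimal.
IMAGE_INSTALL = "packagegroup-core-boot ${CORE_IMAGE_EXTRA_INSTALL}"

inherit core-image

# Add an SSH server so files can be copied to the target.
IMAGE_FEATURES += "ssh-server-dropbear"
EOF
    echo "$layer/recipes-core/images/$name.bb"
}

# Usage, from the poky directory, then build it from the Build Directory:
#   write_image_recipe meta-mylayer my-image
#   bitbake my-image
```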

Finally, here is a list of the basic skills you will need as a systems developer. You must be able to:

deal with corporate proxies

add a package to an image

understand the difference between a recipe and package

build a package by itself and why that’s useful

find out what packages are created by a recipe

find out what files are in a package

find out what files are in an image

add an ssh server to an image (enable transferring of files to target)

know the anatomy of a recipe

know how to create and use layers

find recipes (with the OpenEmbedded Layer index)

understand the difference between machine and distro settings

find and use the right BSP (machine) for your hardware

find examples of distro features and know where to set them

understand the task pipeline and execute individual tasks

understand devtool and how it simplifies your workflow

improve build speeds with shared downloads and shared state cache

generate and understand a dependency graph

generate and understand BitBake environment

build an Extensible SDK for applications development

Depending on what your primary interests are with the Yocto Project, you could consider any of the following reading:

Look Through the Yocto Project Development Tasks Manual: This manual contains procedural information grouped to help you get set up, work with layers, customize images, write new recipes, work with libraries, and use QEMU. The information is task-based and spans the breadth of the Yocto Project. See the Yocto Project Development Tasks Manual.

Look Through the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual: This manual describes how to use both the standard SDK and the extensible SDK, which are used primarily for application development. The Using the Extensible SDK chapter also provides example workflows that use devtool. See the section Using devtool in Your SDK Workflow for more information.

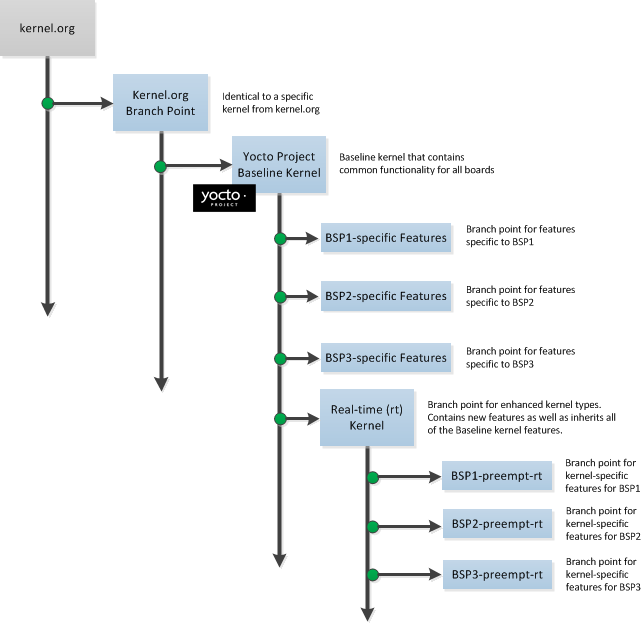

Learn About Kernel Development: If you want to see how to work with the kernel and understand Yocto Linux kernels, see the Yocto Project Linux Kernel Development Manual. This manual provides information on how to patch the kernel, modify kernel recipes, and configure the kernel.

Learn About Board Support Packages (BSPs): If you want to learn about BSPs, see the Yocto Project Board Support Package Developer’s Guide. This manual also provides an example BSP creation workflow. See the Board Support Packages (BSP) — Developer’s Guide section.

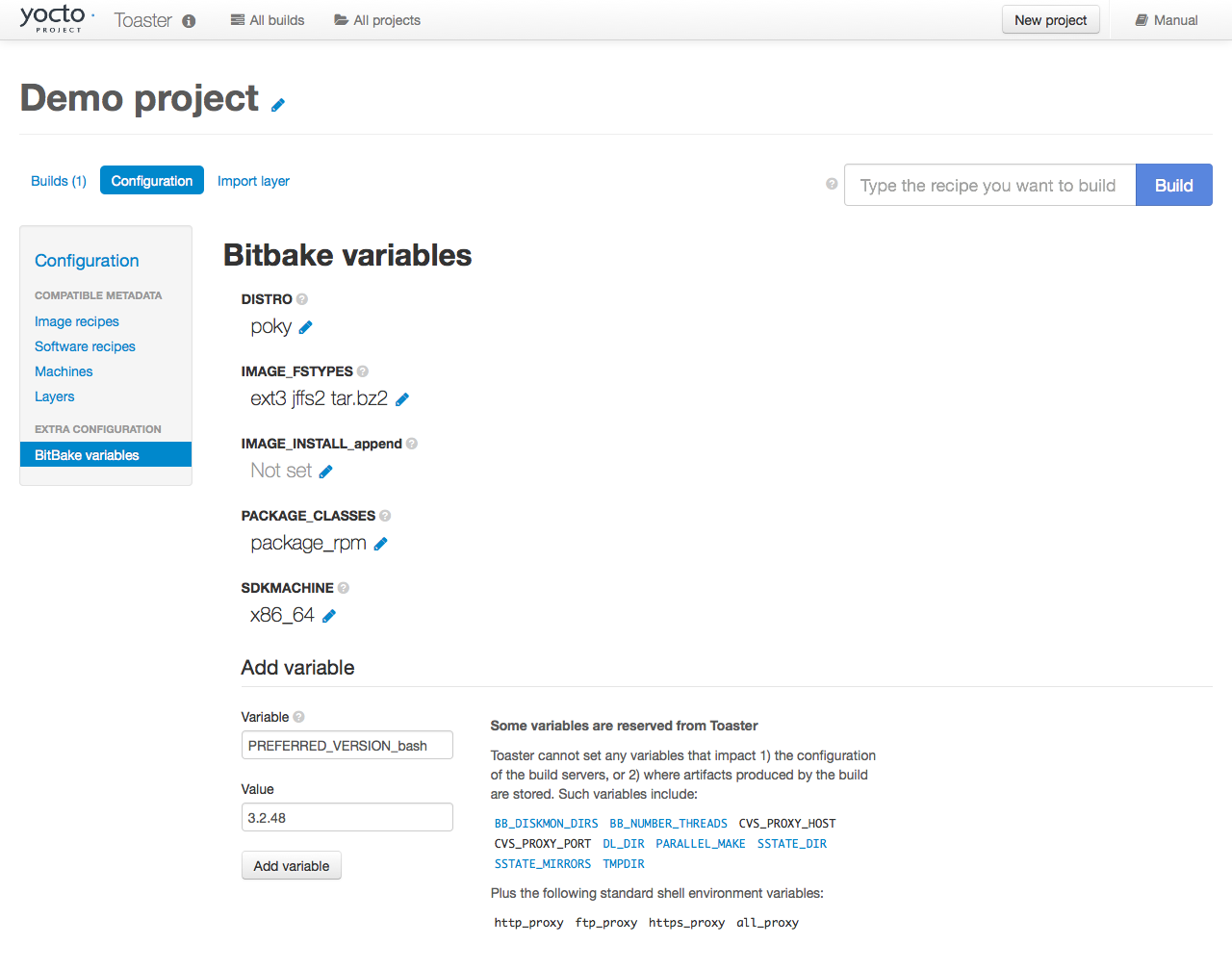

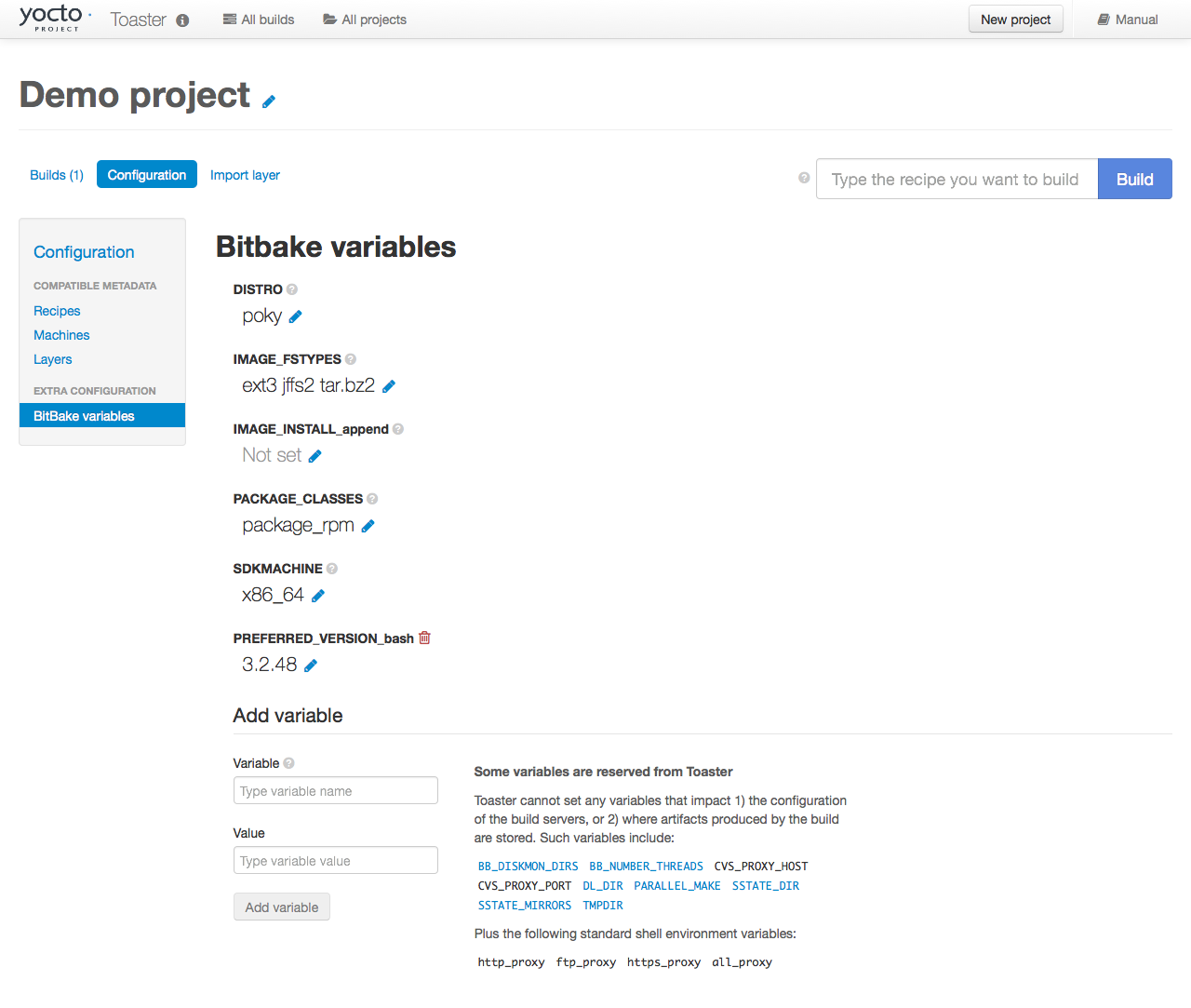

Learn About Toaster: Toaster is a web interface to the Yocto Project’s OpenEmbedded build system. If you are interested in using this type of interface to create images, see the Toaster User Manual.

Discover the VSCode extension: The Yocto Project BitBake extension for the Visual Studio Code IDE provides language features and commands for working with the Yocto Project. If you are interested in using this extension, visit its marketplace page.

Have Available the Yocto Project Reference Manual: Unlike the rest of the Yocto Project manual set, this manual consists of material suited for reference rather than procedures. You can get build details, a closer look at how the pieces of the Yocto Project development environment work together, information on various technical details, guidance on migrating to a newer Yocto Project release, reference material on the directory structure, classes, and tasks. The Yocto Project Reference Manual also contains a fairly comprehensive glossary of variables used within the Yocto Project.


Transitioning to a custom environment for systems development

Note

So you’ve finished the Yocto Project Quick Build and glanced over the document What I wish I’d known about Yocto Project; the latter contains important information learned from other users. You’re well prepared. But now, as you are starting your own project, it isn’t exactly straightforward what to do. And the documentation can be daunting. We’ve put together a few hints to get you started.

Make a list of the processor, target board, technologies, and capabilities that will be part of your project. You will be finding layers with recipes and other metadata that support these things, and adding them to your configuration. (See #3)

Set up your board support. Even if you’re using custom hardware, it might be easier to start with an existing target board that uses the same processor or at least the same architecture as your custom hardware. Knowing the board already has a functioning Board Support Package (BSP) within the project makes it easier for you to get comfortable with project concepts.

Find and acquire the best BSP for your target. Use the Yocto Project Compatible Layers or even the OpenEmbedded Layer Index to find and acquire the best BSP for your target board. The Yocto Project layer index BSPs are regularly validated. The best place to get your first BSP is from your silicon manufacturer or board vendor – they can point you to their most qualified efforts. In general, for Intel silicon use meta-intel, for Texas Instruments use meta-ti, and so forth. Choose a BSP that has been tested with the same Yocto Project release that you’ve downloaded. Be aware that some BSPs may not be immediately supported on the very latest release, but they will be eventually.

You might want to start with the build specification that Poky provides (which is a reference embedded distribution) and then add your newly chosen layers to that. Here is the information about adding layers.

Based on the layers you’ve chosen, make needed changes in your configuration. For instance, you’ve chosen a machine type and added in the corresponding BSP layer. You’ll then need to change the value of the MACHINE variable in your configuration file (build/conf/local.conf) to point to that same machine type. There could be other layer-specific settings you need to change as well. Each layer has a README document that you can look at for this type of usage information.

Add a new layer for any custom recipes and metadata you create. Use the bitbake-layers create-layer tool for Yocto Project 2.4+ releases. If you are using a Yocto Project release earlier than 2.4, use the yocto-layer create tool. The bitbake-layers tool also provides a number of other useful layer-related commands. See the Creating a General Layer Using the bitbake-layers Script section.

Create your own layer for the BSP you’re going to use. It is not common that you would need to create an entire BSP from scratch unless you have a really special device. Even if you are using an existing BSP, create your own layer for the BSP. For example, given a 64-bit x86-based machine, copy the conf/intel-corei7-64 definition and give the machine a relevant name (think board name, not product name). Make sure the layer configuration is dependent on the meta-intel layer (or at least, meta-intel remains in your bblayers.conf). Now you can put your custom BSP settings into your layer and you can re-use it for different applications.
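The BSP-layer step above can be sketched as a script that copies an existing machine configuration into your own layer under a new board name. This is a minimal sketch: the function name `derive_machine`, the layer name meta-mybsp, the board name myboard, and the assumption that meta-intel keeps its machine file at conf/machine/intel-corei7-64.conf are illustrative; check the BSP layer itself for the real file location.

```shell
# Copy machine configuration $1 into layer $2 as conf/machine/$3.conf and
# print the follow-up steps described above.
# Example (paths are assumptions):
#   derive_machine meta-intel/conf/machine/intel-corei7-64.conf meta-mybsp myboard
derive_machine() {
    src=$1
    layer=$2
    board=$3
    [ -f "$src" ] || { echo "no such machine file: $src" >&2; return 1; }
    mkdir -p "$layer/conf/machine"
    cp "$src" "$layer/conf/machine/$board.conf"
    echo "created $layer/conf/machine/$board.conf"
    echo "next: keep the original BSP layer in bblayers.conf (or declare it in"
    echo "      LAYERDEPENDS in $layer/conf/layer.conf) and set MACHINE = \"$board\""
}
```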

Write your own recipe to build additional software support that isn’t already available in the form of a recipe. Creating your own recipe is especially important for custom application software that you want to run on your device. Writing new recipes is a process of refinement. Start by getting each step of the build process working beginning with fetching all the way through packaging. Next, run the software on your target and refine further as needed. See Writing a New Recipe in the Yocto Project Development Tasks Manual for more information.
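A minimal sketch of a first recipe for a single-file C program, written by a shell helper so the steps stay reproducible. The recipe name hello, the source file hello.c, the recipes-example/hello location, and the function name `write_hello_recipe` are hypothetical. The license checksum is the one commonly used for the MIT text in Poky’s common-licenses directory, and S = "${WORKDIR}" follows the convention of releases such as scarthgap; newer releases may expect a different setting for local source files.

```shell
# Write a minimal recipe for a single-file C program into layer $1 as
# recipes-example/hello/hello_1.0.bb, with its source in files/hello.c.
# All names here are hypothetical examples.
write_hello_recipe() {
    dir="$1/recipes-example/hello"
    mkdir -p "$dir/files"
    cat > "$dir/files/hello.c" <<'EOF'
#include <stdio.h>

int main(void)
{
    printf("Hello, world!\n");
    return 0;
}
EOF
    cat > "$dir/hello_1.0.bb" <<'EOF'
SUMMARY = "Hello world example"
LICENSE = "MIT"
LIC_FILES_CHKSUM = "file://${COMMON_LICENSE_DIR}/MIT;md5=0835ade698e0bcf8506ecda2f7b4f302"

SRC_URI = "file://hello.c"
S = "${WORKDIR}"

do_compile() {
    ${CC} ${CFLAGS} ${LDFLAGS} hello.c -o hello
}

do_install() {
    install -d ${D}${bindir}
    install -m 0755 hello ${D}${bindir}
}
EOF
    echo "$dir/hello_1.0.bb"
}

# Usage, from the poky directory, then from the Build Directory:
#   write_hello_recipe meta-mylayer
#   bitbake hello
```

Getting do_fetch through do_package to succeed for this recipe, then running the program on the target, mirrors the refinement process described above.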

Now you’re ready to create an image recipe. There are a number of ways to do this. However, it is strongly recommended that you have your own image recipe; don’t try appending to existing image recipes. Recipes for images are trivial to create and you usually want to fully customize their contents.

Build your image and refine it. Add what’s missing and fix anything that’s broken using your knowledge of the workflow to identify where issues might be occurring.

Consider creating your own distribution. When you get to a certain level of customization, consider creating your own distribution rather than using the default reference distribution.

Distribution settings define the packaging back-end (e.g. rpm or other) as well as the package feed and possibly the update solution. You would create your own distribution in a new layer inheriting from Poky but overriding what needs to change for your distribution. If you find yourself adding a lot of configuration to your local.conf file aside from paths and other typical local settings, it’s time to consider creating your own distribution.

If needed, you can add product specifications that customize the distribution in other layers. You can also add other functionality specific to the product. However, to update the distribution itself rather than individual products, update the distribution features through the distribution layer.
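The bitbake-layers create-layer command from the "Add a new layer" step is the supported way to start a new layer. As a rough illustration of what such a layer contains, the following Python sketch writes a minimal skeleton: a conf/layer.conf file, an empty recipes-example/example directory, and a README. The layer name meta-mylayer, the priority 6, and the release codename passed to LAYERSERIES_COMPAT ("scarthgap" is used only as an example) are placeholder assumptions; compare the result with what bitbake-layers create-layer generates for your release.

```python
import tempfile
from pathlib import Path

# Placeholder template modeled on a typical conf/layer.conf.
# Doubled braces become literal braces after str.format().
LAYER_CONF_TEMPLATE = """\
# Add this layer's top-level directory to BBPATH.
BBPATH .= ":${{LAYERDIR}}"

# Recipes and append files contributed by this layer.
BBFILES += "${{LAYERDIR}}/recipes-*/*/*.bb \\
            ${{LAYERDIR}}/recipes-*/*/*.bbappend"

BBFILE_COLLECTIONS += "{collection}"
BBFILE_PATTERN_{collection} = "^${{LAYERDIR}}/"
BBFILE_PRIORITY_{collection} = "{priority}"

LAYERDEPENDS_{collection} = "core"
LAYERSERIES_COMPAT_{collection} = "{series}"
"""


def create_layer_skeleton(parent: Path, name: str = "meta-mylayer",
                          priority: int = 6, series: str = "scarthgap") -> Path:
    """Create a minimal (hypothetical) layer directory under `parent`."""
    collection = name.removeprefix("meta-")  # requires Python 3.9+
    layer = parent / name
    (layer / "conf").mkdir(parents=True, exist_ok=True)
    (layer / "recipes-example" / "example").mkdir(parents=True, exist_ok=True)
    (layer / "conf" / "layer.conf").write_text(
        LAYER_CONF_TEMPLATE.format(collection=collection, priority=priority, series=series)
    )
    (layer / "README").write_text(f"Describe the {name} layer and its dependencies here.\n")
    return layer


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        layer = create_layer_skeleton(Path(tmp))
        print((layer / "conf" / "layer.conf").read_text())
```

A layer created this way still has to be added to conf/bblayers.conf in your Build Directory (for example with bitbake-layers add-layer) before BitBake uses it.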

Congratulations! You’re well on your way. Welcome to the Yocto Project community.

Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons Attribution-Share Alike 2.0 UK: England & Wales as published by Creative Commons.

To report any inaccuracies or problems with this (or any other Yocto Project) manual, or to send additions or changes, please send email/patches to the Yocto Project documentation mailing list at docs@lists.yoctoproject.org or log into the Libera Chat #yocto channel.

Yocto Project Overview and Concepts Manual

1 The Yocto Project Overview and Concepts Manual

1.1 Welcome

Welcome to the Yocto Project Overview and Concepts Manual! This manual introduces the Yocto Project by providing concepts, software overviews, best-known-methods (BKMs), and any other high-level introductory information suitable for a new Yocto Project user.

Here is what you can get from this manual:

Introducing the Yocto Project: This chapter provides an introduction to the Yocto Project. You will learn about features and challenges of the Yocto Project, the layer model, components and tools, development methods, the Poky reference distribution, the OpenEmbedded build system workflow, and some basic Yocto terms.

The Yocto Project Development Environment: This chapter helps you get started understanding the Yocto Project development environment. You will learn about open source, development hosts, Yocto Project source repositories, workflows using Git and the Yocto Project, a Git primer, and information about licensing.

Yocto Project Concepts: This chapter presents various concepts regarding the Yocto Project. You can find conceptual information about components, development, cross-toolchains, and so forth.

This manual does not give you the following:

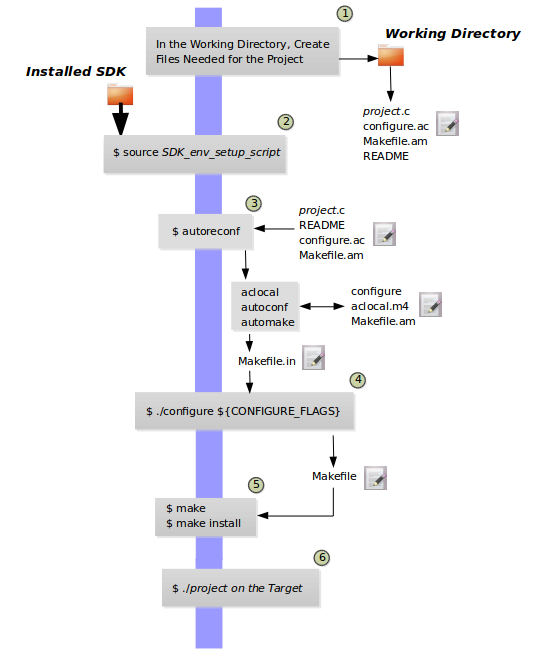

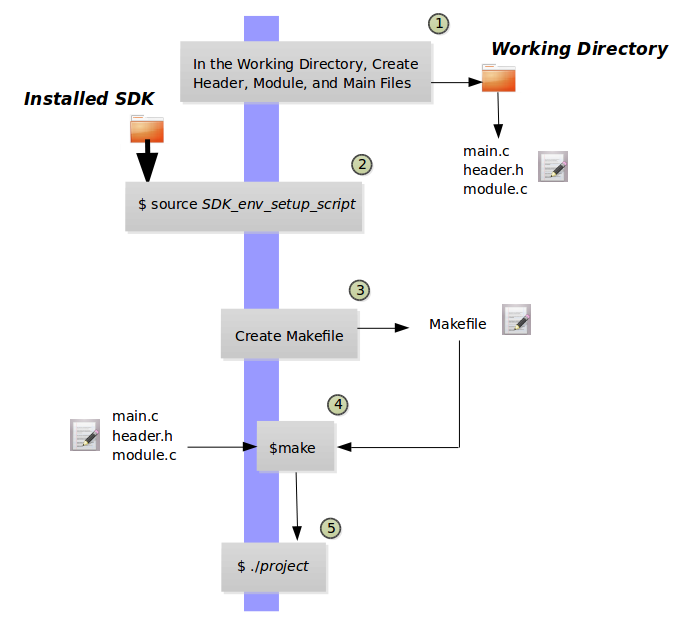

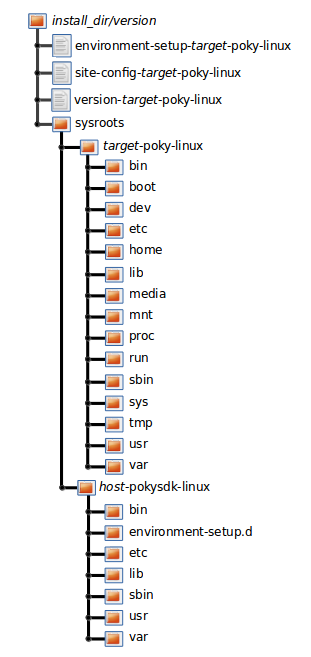

Step-by-step Instructions for Development Tasks: Instructional procedures reside in other manuals within the Yocto Project documentation set. For example, the Yocto Project Development Tasks Manual provides examples on how to perform various development tasks. As another example, the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual contains detailed instructions on how to install an SDK, which is used to develop applications for target hardware.

Reference Material: This type of material resides in an appropriate reference manual. For example, system variables are documented in the Yocto Project Reference Manual. As another example, the Yocto Project Board Support Package Developer’s Guide contains reference information on BSPs.

Detailed Public Information Not Specific to the Yocto Project: For example, exhaustive information on how to use the Source Control Manager Git is better covered with Internet searches and official Git Documentation than through the Yocto Project documentation.

1.2 Other Information

Because this manual presents information for many different topics, supplemental information is recommended for full comprehension. For additional introductory information on the Yocto Project, see the Yocto Project Website. If you want to build an image with no knowledge of Yocto Project as a way of quickly testing it out, see the Yocto Project Quick Build document. For a comprehensive list of links and other documentation, see the “Links and Related Documentation” section in the Yocto Project Reference Manual.

2 Introducing the Yocto Project

2.1 What is the Yocto Project?

The Yocto Project is an open source collaboration project that helps developers create custom Linux-based systems that are designed for embedded products regardless of the product’s hardware architecture. Yocto Project provides a flexible toolset and a development environment that allows embedded device developers across the world to collaborate through shared technologies, software stacks, configurations, and best practices used to create these tailored Linux images.

Thousands of developers worldwide have discovered that Yocto Project provides advantages in both systems and applications development, archival and management benefits, and customizations used for speed, footprint, and memory utilization. The project is a standard when it comes to delivering embedded software stacks. The project allows software customizations and build interchange for multiple hardware platforms as well as software stacks that can be maintained and scaled.

For further introductory information on the Yocto Project, you might be interested in this article by Drew Moseley and in this short introductory video.

The remainder of this section overviews advantages and challenges tied to the Yocto Project.

2.1.1 Features

Here are features and advantages of the Yocto Project:

Widely Adopted Across the Industry: Many semiconductor, operating system, software, and service vendors adopt and support the Yocto Project in their products and services. For a look at the Yocto Project community and the companies involved with the Yocto Project, see the “COMMUNITY” and “ECOSYSTEM” tabs on the Yocto Project home page.

Architecture Agnostic: Yocto Project supports Intel, ARM, MIPS, AMD, PPC and other architectures. Most ODMs, OSVs, and chip vendors create and supply BSPs that support their hardware. If you have custom silicon, you can create a BSP that supports that architecture.

Aside from broad architecture support, the Yocto Project fully supports a wide range of devices emulated by the Quick EMUlator (QEMU).

Images and Code Transfer Easily: Yocto Project output can easily move between architectures without moving to new development environments. Additionally, if you have used the Yocto Project to create an image or application and you find yourself not able to support it, commercial Linux vendors such as Wind River, Mentor Graphics, Timesys, and ENEA could take it and provide ongoing support. These vendors have offerings that are built using the Yocto Project.

Flexibility: Corporations use the Yocto Project many different ways. One example is to create an internal Linux distribution as a code base the corporation can use across multiple product groups. Through customization and layering, a project group can leverage the base Linux distribution to create a distribution that works for their product needs.

Ideal for Constrained Embedded and IoT devices: Unlike a full Linux distribution, you can use the Yocto Project to create exactly what you need for embedded devices. You only add the feature support or packages that you absolutely need for the device. For devices that have display hardware, you can use available system components such as X11, Wayland, GTK+, Qt, Clutter, and SDL (among others) to create a rich user experience. For devices that do not have a display or where you want to use alternative UI frameworks, you can choose to not build these components.

Comprehensive Toolchain Capabilities: Toolchains for supported architectures satisfy most use cases. However, if your hardware supports features that are not part of a standard toolchain, you can easily customize that toolchain through specification of platform-specific tuning parameters. And, should you need to use a third-party toolchain, mechanisms built into the Yocto Project allow for that.

Mechanism Rules Over Policy: Focusing on mechanism rather than policy ensures that you are free to set policies based on the needs of your design instead of adopting decisions enforced by some system software provider.

Uses a Layer Model: The Yocto Project layer infrastructure groups related functionality into separate bundles. You can incrementally add these grouped functionalities to your project as needed. Using layers to isolate and group functionality reduces project complexity and redundancy, allows you to easily extend the system, make customizations, and keep functionality organized.

Supports Partial Builds: You can build and rebuild individual packages as needed. Yocto Project accomplishes this through its Shared State Cache (sstate) scheme. Being able to build and debug components individually eases project development.

Releases According to a Strict Schedule: Major releases occur predictably on a six-month cycle, in April and October. The two most recent releases receive point releases to address common vulnerabilities and exposures. This predictability is crucial for projects based on the Yocto Project and allows development teams to plan activities.

Rich Ecosystem of Individuals and Organizations: For open source projects, the value of community is very important. Support forums, expertise, and active developers who continue to push the Yocto Project forward are readily available.

Binary Reproducibility: The Yocto Project allows you to be very specific about dependencies and achieves very high percentages of binary reproducibility (e.g. 99.8% for core-image-minimal). When distributions are not specific about which packages are pulled in and in what order to support dependencies, other build systems can arbitrarily include packages.

License Manifest: The Yocto Project provides a license manifest for review by people who need to track the use of open source licenses (e.g. legal teams).
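Because the license manifest is a plain-text file, it is easy to post-process for a legal review. The following Python sketch groups package names by license. It assumes each package is described by a block of "KEY: value" lines (PACKAGE NAME, PACKAGE VERSION, RECIPE NAME, LICENSE) separated by blank lines; check that assumption against the license.manifest produced by your own build. The sample data is made up for illustration.

```python
from collections import defaultdict

# Made-up sample in the assumed license.manifest layout (not from a real build).
SAMPLE_MANIFEST = """\
PACKAGE NAME: busybox
PACKAGE VERSION: 1.36.1
RECIPE NAME: busybox
LICENSE: GPL-2.0-only & bzip2-1.0.4

PACKAGE NAME: base-files
PACKAGE VERSION: 3.0.14
RECIPE NAME: base-files
LICENSE: GPL-2.0-only
"""


def packages_by_license(manifest_text: str) -> dict[str, list[str]]:
    """Group package names by their LICENSE value."""
    groups: dict[str, list[str]] = defaultdict(list)
    record: dict[str, str] = {}
    # The extra empty line at the end flushes the last record.
    for line in manifest_text.splitlines() + [""]:
        line = line.strip()
        if not line:
            if "PACKAGE NAME" in record and "LICENSE" in record:
                groups[record["LICENSE"]].append(record["PACKAGE NAME"])
            record = {}
            continue
        key, _, value = line.partition(":")
        record[key.strip()] = value.strip()
    return {lic: sorted(pkgs) for lic, pkgs in sorted(groups.items())}


if __name__ == "__main__":
    for license_expr, packages in packages_by_license(SAMPLE_MANIFEST).items():
        print(f"{license_expr}: {', '.join(packages)}")
```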

2.1.2 Challenges

Here are challenges you might encounter when developing using the Yocto Project:

Steep Learning Curve: The Yocto Project has a steep learning curve and has many different ways to accomplish similar tasks. It can be difficult to choose between such ways.

Understanding What Changes You Need to Make For Your Design Requires Some Research: Beyond the simple tutorial stage, understanding what changes need to be made for your particular design can require a significant amount of research and investigation. For information that helps you transition from trying out the Yocto Project to using it for your project, see the “What I wish I’d known about Yocto Project” and “Transitioning to a custom environment for systems development” documents on the Yocto Project website.

Project Workflow Could Be Confusing: The Yocto Project workflow could be confusing if you are used to traditional desktop and server software development. In a desktop development environment, there are mechanisms to easily pull and install new packages, which are typically pre-compiled binaries from servers accessible over the Internet. Using the Yocto Project, you must modify your configuration and rebuild to add additional packages.

Working in a Cross-Build Environment Can Feel Unfamiliar: When developing code to run on a target, compilation, execution, and testing done on the actual target can be faster than running a BitBake build on a development host and then deploying binaries to the target for test. While the Yocto Project does support development tools on the target, the additional step of integrating your changes back into the Yocto Project build environment would be required. Yocto Project supports an intermediate approach that involves making changes on the development system within the BitBake environment and then deploying only the updated packages to the target.

The Yocto Project OpenEmbedded Build System produces packages in standard formats (i.e. RPM, DEB, IPK, and TAR). You can deploy these packages into the running system on the target by using utilities on the target such as rpm or opkg.

Initial Build Times Can be Significant: Long initial build times are unfortunately unavoidable due to the large number of packages initially built from scratch for a fully functioning Linux system. Once that initial build is completed, however, the shared-state (sstate) cache mechanism Yocto Project uses keeps the system from rebuilding packages that have not been “touched” since the last build. The sstate mechanism significantly reduces times for successive builds.
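To see why successive builds are faster, it helps to picture how a signature-based cache behaves. The Python sketch below is a deliberately simplified model, not BitBake's implementation: each task's signature is a hash of its own inputs plus the signatures of the tasks it depends on, and a task only runs when no cached output exists for that signature. The recipe names libfoo and app and their inputs are hypothetical.

```python
import hashlib
import json
from typing import Callable


def task_signature(task: str, inputs: dict, dep_signatures: list[str]) -> str:
    """Hash a task's name, its input variables, and its dependencies' signatures."""
    payload = json.dumps(
        {"task": task, "inputs": inputs, "deps": sorted(dep_signatures)},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class SignatureCache:
    """Toy stand-in for a shared-state cache keyed by task signature."""

    def __init__(self) -> None:
        self._outputs: dict[str, object] = {}
        self.executed: list[str] = []  # tasks that actually ran (cache misses)

    def run(self, task: str, inputs: dict, dep_signatures: list[str],
            build: Callable[[], object]) -> str:
        sig = task_signature(task, inputs, dep_signatures)
        if sig not in self._outputs:
            self.executed.append(task)
            self._outputs[sig] = build()
        return sig


def build_all(cache: SignatureCache, cflags: str) -> None:
    # Hypothetical recipes: "app" depends on the compiled output of "libfoo".
    lib_sig = cache.run("libfoo:do_compile", {"CFLAGS": cflags}, [], lambda: "libfoo.so")
    cache.run("app:do_compile", {"PV": "1.0"}, [lib_sig], lambda: "app")


if __name__ == "__main__":
    cache = SignatureCache()
    build_all(cache, "-O2")  # first build: both tasks run
    build_all(cache, "-O2")  # nothing changed: both outputs are reused
    build_all(cache, "-Os")  # libfoo input changed: libfoo and app run again
    print(cache.executed)
```

Because a dependency's signature feeds into every dependent task's signature, changing libfoo's CFLAGS reruns both libfoo and app, while the untouched second build reuses everything.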

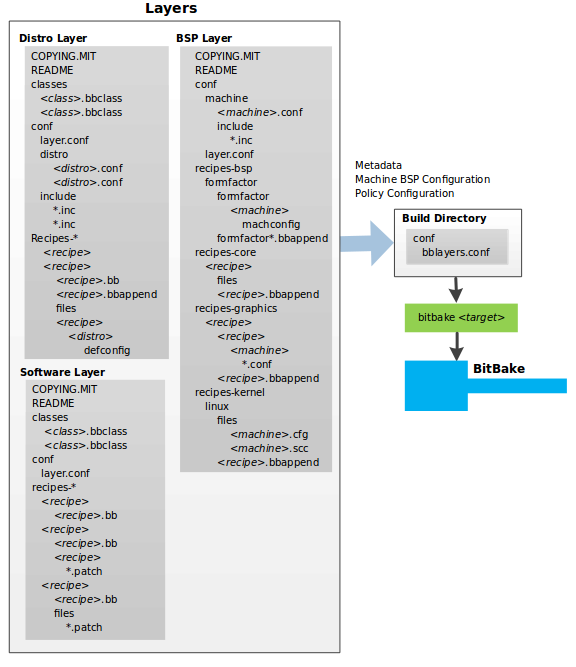

2.2 The Yocto Project Layer Model

The Yocto Project’s “Layer Model” is a development model for embedded and IoT Linux creation that distinguishes the Yocto Project from other simple build systems. The Layer Model simultaneously supports collaboration and customization. Layers are repositories that contain related sets of instructions that tell the OpenEmbedded Build System what to do. You can collaborate, share, and reuse layers.

Layers can contain changes to previous instructions or settings at any time. This powerful override capability is what allows you to customize previously supplied collaborative or community layers to suit your product requirements.

You use different layers to logically separate information in your build. As an example, you could have BSP, GUI, distro configuration, middleware, or application layers. Putting your entire build into one layer limits and complicates future customization and reuse. Isolating information into layers, on the other hand, helps simplify future customizations and reuse. You might find it tempting to keep everything in one layer when working on a single project. However, the more modular your Metadata, the easier it is to cope with future changes.
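The following Python sketch models one piece of the override capability described above: when more than one layer provides a recipe with the same name, BitBake prefers the recipe from the layer with the highest BBFILE_PRIORITY, regardless of the recipe's version (PV). The layer names, priorities, and versions below are illustrative, and the sketch deliberately ignores other factors BitBake also considers, such as PREFERRED_VERSION and version comparison among recipes from equally ranked layers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecipeCandidate:
    layer: str     # layer that provides the recipe
    priority: int  # that layer's BBFILE_PRIORITY
    pn: str        # recipe name (PN)
    pv: str        # recipe version (PV); intentionally not used for selection here


def select_recipe(candidates: list[RecipeCandidate], pn: str) -> RecipeCandidate:
    """Pick the provider of `pn` from the highest-priority layer (simplified)."""
    matching = [c for c in candidates if c.pn == pn]
    if not matching:
        raise LookupError(f"no recipe provides {pn!r}")
    return max(matching, key=lambda c: c.priority)


if __name__ == "__main__":
    candidates = [
        RecipeCandidate("meta", 5, "busybox", "1.36.1"),
        RecipeCandidate("meta-mylayer", 6, "busybox", "1.35.0"),
    ]
    chosen = select_recipe(candidates, "busybox")
    # The higher-priority layer wins even though its version is older.
    print(chosen.layer, chosen.pv)
```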

Note

Use Board Support Package (BSP) layers from silicon vendors when possible.

Familiarize yourself with the Yocto Project Compatible Layers or the OpenEmbedded Layer Index. The latter contains more layers but they are less universally validated.

Layers support the inclusion of technologies, hardware components, and software components. The Yocto Project Compatible designation provides a minimum level of standardization that contributes to a strong ecosystem. “YP Compatible” is applied to appropriate products and software components such as BSPs, other OE-compatible layers, and related open-source projects, allowing the producer to use Yocto Project badges and branding assets.

To illustrate how layers are used to keep things modular, consider machine customizations. These types of customizations typically reside in a special layer, rather than a general layer, called a BSP Layer. Furthermore, the machine customizations should be isolated from recipes and Metadata that support a new GUI environment, for example. This situation gives you a couple of layers: one for the machine configurations, and one for the GUI environment. It is important to understand, however, that the BSP layer can still make machine-specific additions to recipes within the GUI environment layer without polluting the GUI layer itself with those machine-specific changes. You can accomplish this through a recipe that is a BitBake append (.bbappend) file, which is described later in this section.
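BitBake pairs each .bbappend file with the recipe whose file name it matches: the name before .bbappend must match the recipe's name before .bb, and a % character acts as a wildcard for the rest of the name (for example, busybox_%.bbappend applies to any busybox recipe version). This is how a BSP layer can carry an append for a recipe that lives in another layer. The Python sketch below is a simplified model of that matching rule, not BitBake's own code; the layer and recipe paths are hypothetical.

```python
import os


def appends_for(recipe_path: str, bbappend_paths: list[str]) -> list[str]:
    """Return the .bbappend files that apply to `recipe_path` (simplified rule)."""
    recipe = os.path.basename(recipe_path)  # e.g. "busybox_1.36.1.bb"
    matches = []
    for path in bbappend_paths:
        # Compare names as if the append file ended in ".bb".
        name = os.path.basename(path).replace(".bbappend", ".bb")
        if name == recipe:
            matches.append(path)  # exact match, e.g. busybox_1.36.1.bbappend
        elif "%" in name and recipe.startswith(name[: name.index("%")]):
            matches.append(path)  # wildcard match on the part before "%"
    return matches


if __name__ == "__main__":
    appends = [
        "meta-mybsp/recipes-core/busybox/busybox_%.bbappend",
        "meta-mybsp/recipes-core/busybox/busybox_1.%.bbappend",
        "meta-old/recipes-core/busybox/busybox_1.35.0.bbappend",
        "meta-gui/recipes-graphics/foo/foo_%.bbappend",
    ]
    for match in appends_for("meta/recipes-core/busybox/busybox_1.36.1.bb", appends):
        print(match)
```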

Note

For general information on BSP layer structure, see the Yocto Project Board Support Package Developer’s Guide.

The Source Directory contains both general layers and BSP layers right out of the box. You can easily identify layers that ship with a Yocto Project release in the Source Directory by their names. Layers typically have names that begin with the string meta-.

Note

It is not a requirement that a layer name begin with the prefix meta-, but it is a commonly accepted standard in the Yocto Project community.

For example, if you examine the tree view of the poky repository, you will see several layers: meta, meta-skeleton, meta-selftest, meta-poky, and meta-yocto-bsp. Each of these directories represents a distinct layer.

For procedures on how to create layers, see the “Understanding and Creating Layers” section in the Yocto Project Development Tasks Manual.

2.3 Components and Tools

The Yocto Project employs a collection of components and tools used by the project itself, by project developers, and by those using the Yocto Project. These components and tools are open source projects and metadata that are separate from the reference distribution (Poky) and the OpenEmbedded Build System. Most of the components and tools are downloaded separately.

This section provides brief overviews of the components and tools associated with the Yocto Project.

2.3.1 Development Tools

Here are tools that help you develop images and applications using the Yocto Project:

CROPS: CROPS is an open source, cross-platform development framework that leverages Docker Containers. CROPS provides an easily managed, extensible environment that allows you to build binaries for a variety of architectures on Windows, Linux and Mac OS X hosts.

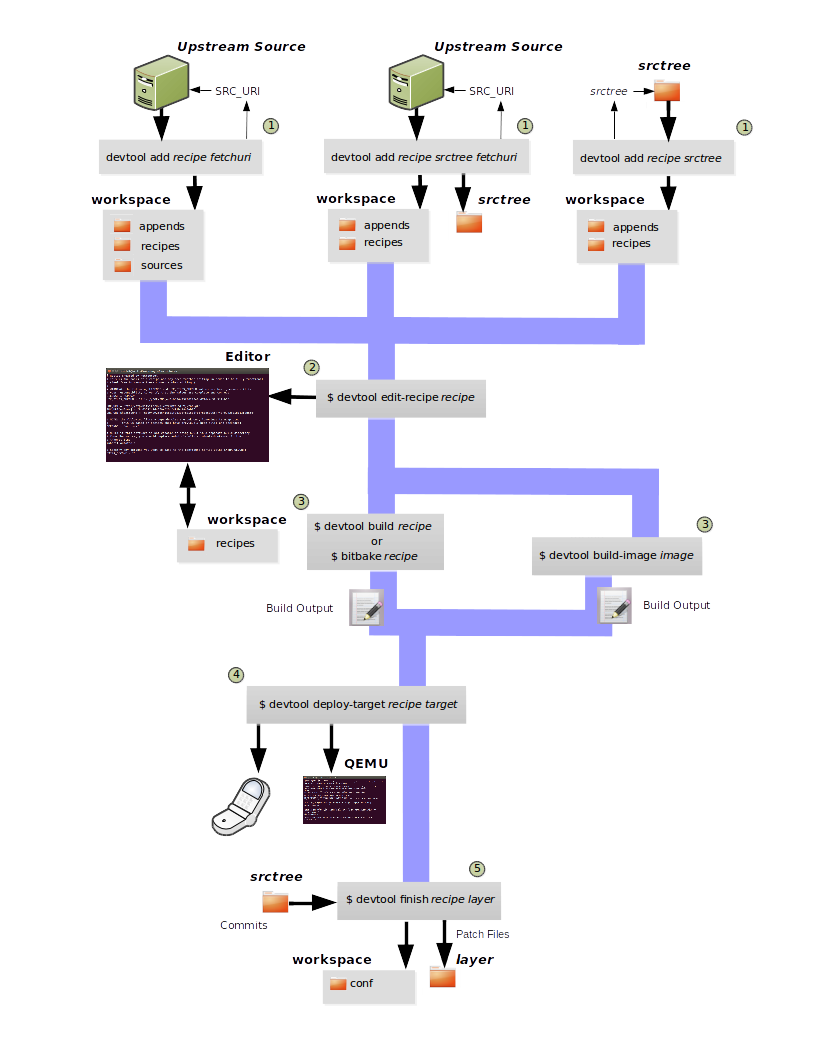

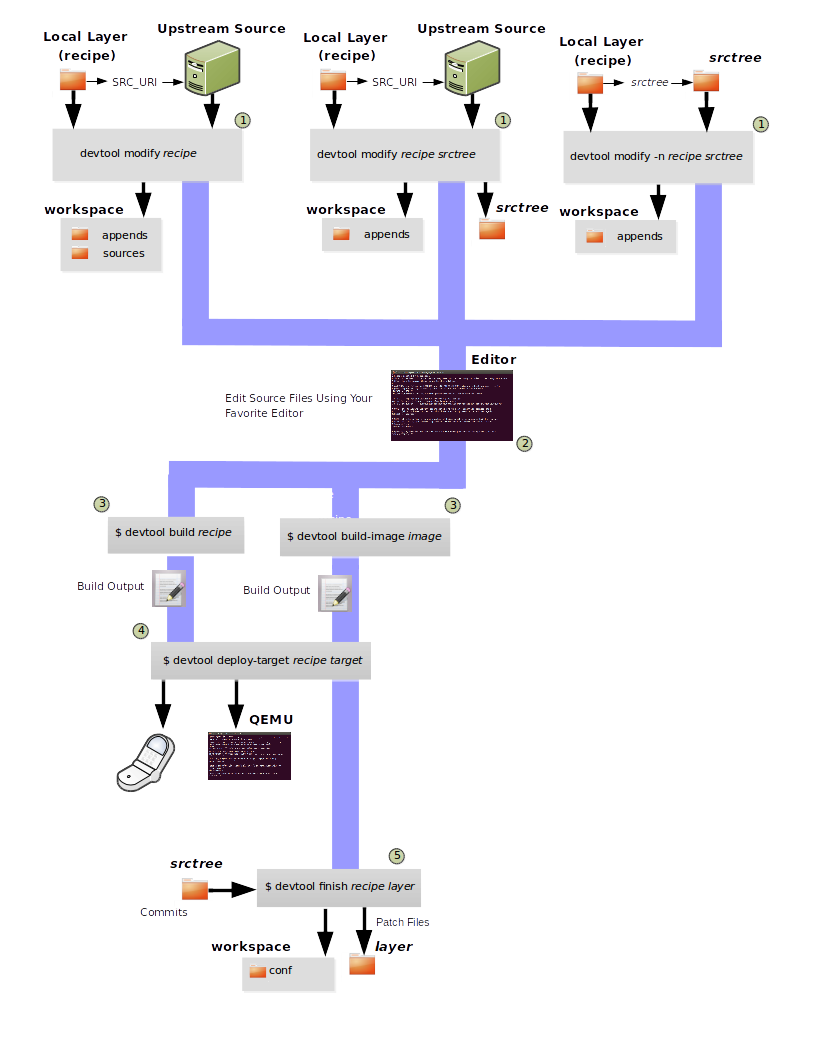

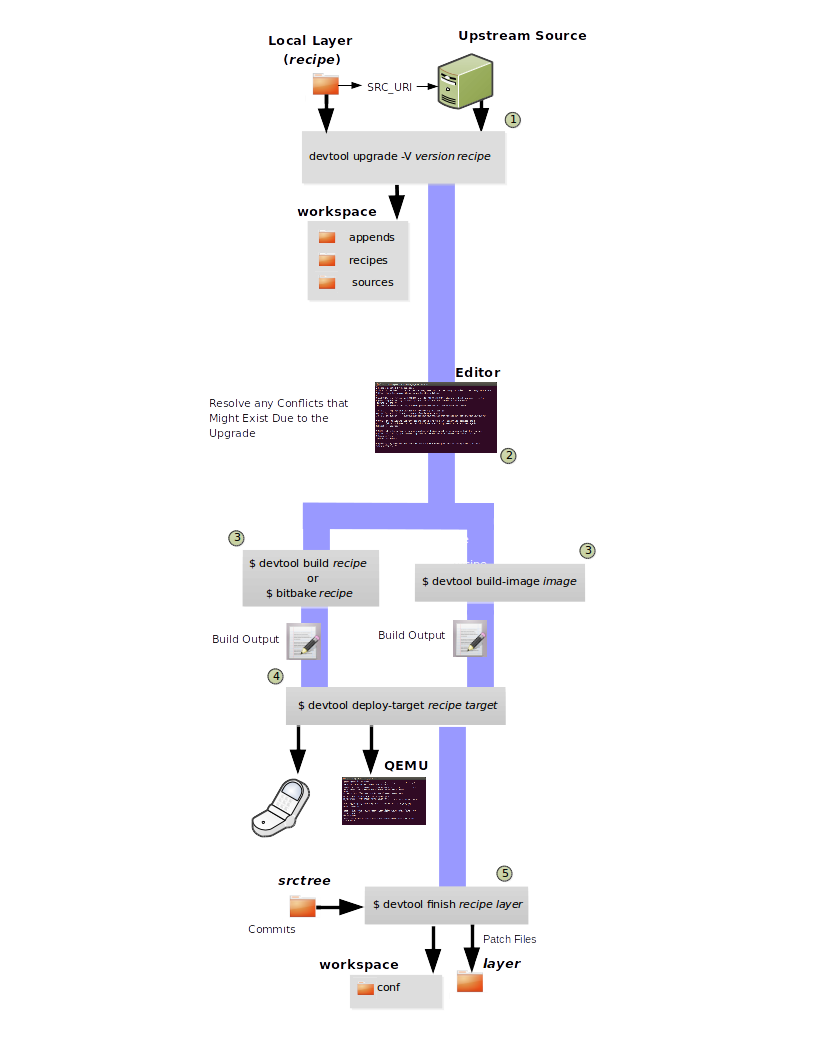

devtool: This command-line tool is available as part of the extensible SDK (eSDK) and is its cornerstone. You can use

devtoolto help build, test, and package software within the eSDK. You can use the tool to optionally integrate what you build into an image built by the OpenEmbedded build system.The

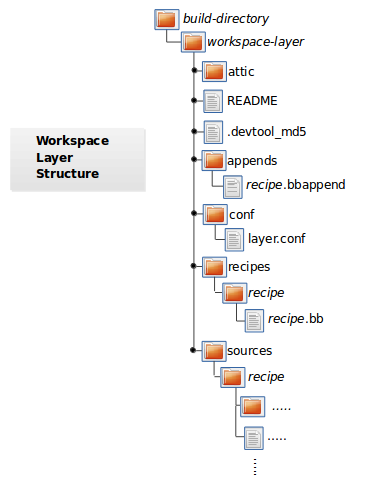

devtoolcommand employs a number of sub-commands that allow you to add, modify, and upgrade recipes. As with the OpenEmbedded build system, “recipes” represent software packages withindevtool. When you usedevtool add, a recipe is automatically created. When you usedevtool modify, the specified existing recipe is used in order to determine where to get the source code and how to patch it. In both cases, an environment is set up so that when you build the recipe a source tree that is under your control is used in order to allow you to make changes to the source as desired. By default, both new recipes and the source go into a “workspace” directory under the eSDK. Thedevtool upgradecommand updates an existing recipe so that you can build it for an updated set of source files.You can read about the

devtoolworkflow in the Yocto Project Application Development and Extensible Software Development Kit (eSDK) Manual in the “Using devtool in Your SDK Workflow” section.Extensible Software Development Kit (eSDK): The eSDK provides a cross-development toolchain and libraries tailored to the contents of a specific image. The eSDK makes it easy to add new applications and libraries to an image, modify the source for an existing component, test changes on the target hardware, and integrate into the rest of the OpenEmbedded build system. The eSDK gives you a toolchain experience supplemented with the powerful set of

devtoolcommands tailored for the Yocto Project environment.For information on the eSDK, see the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) Manual.

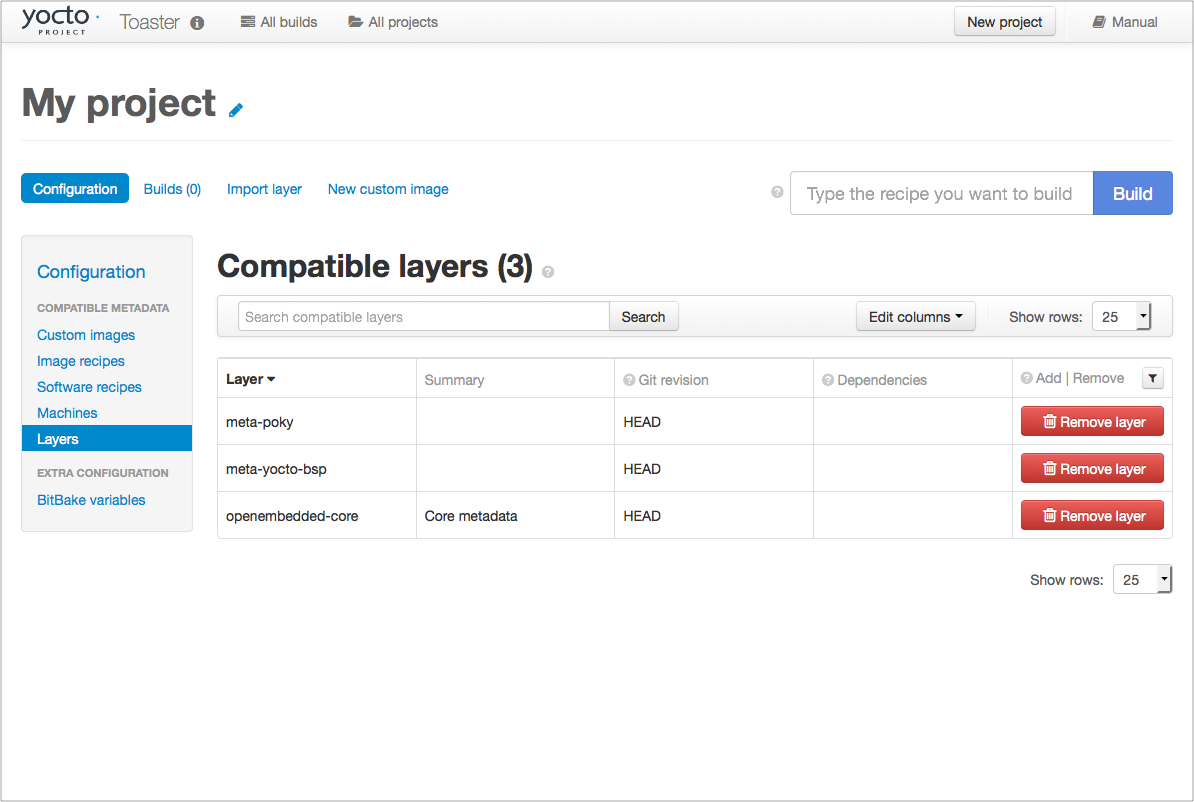

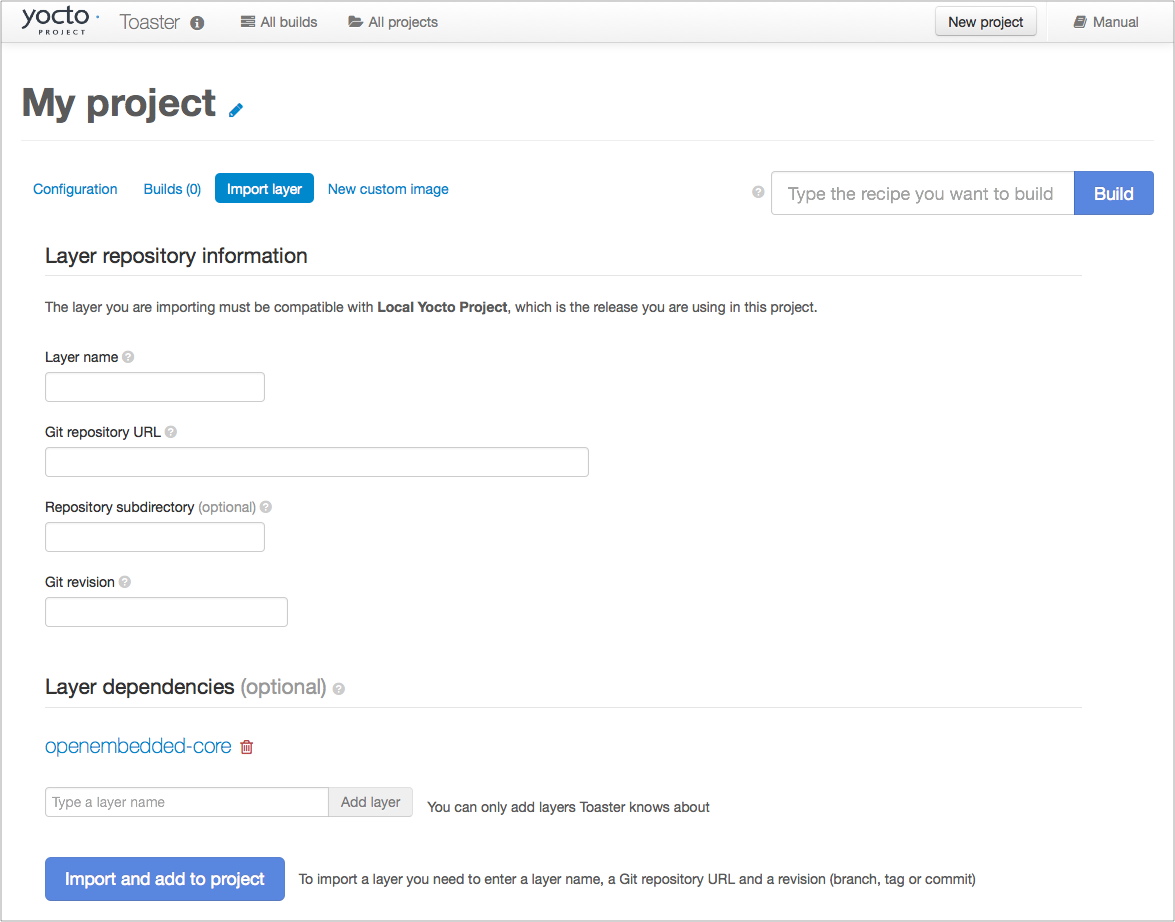

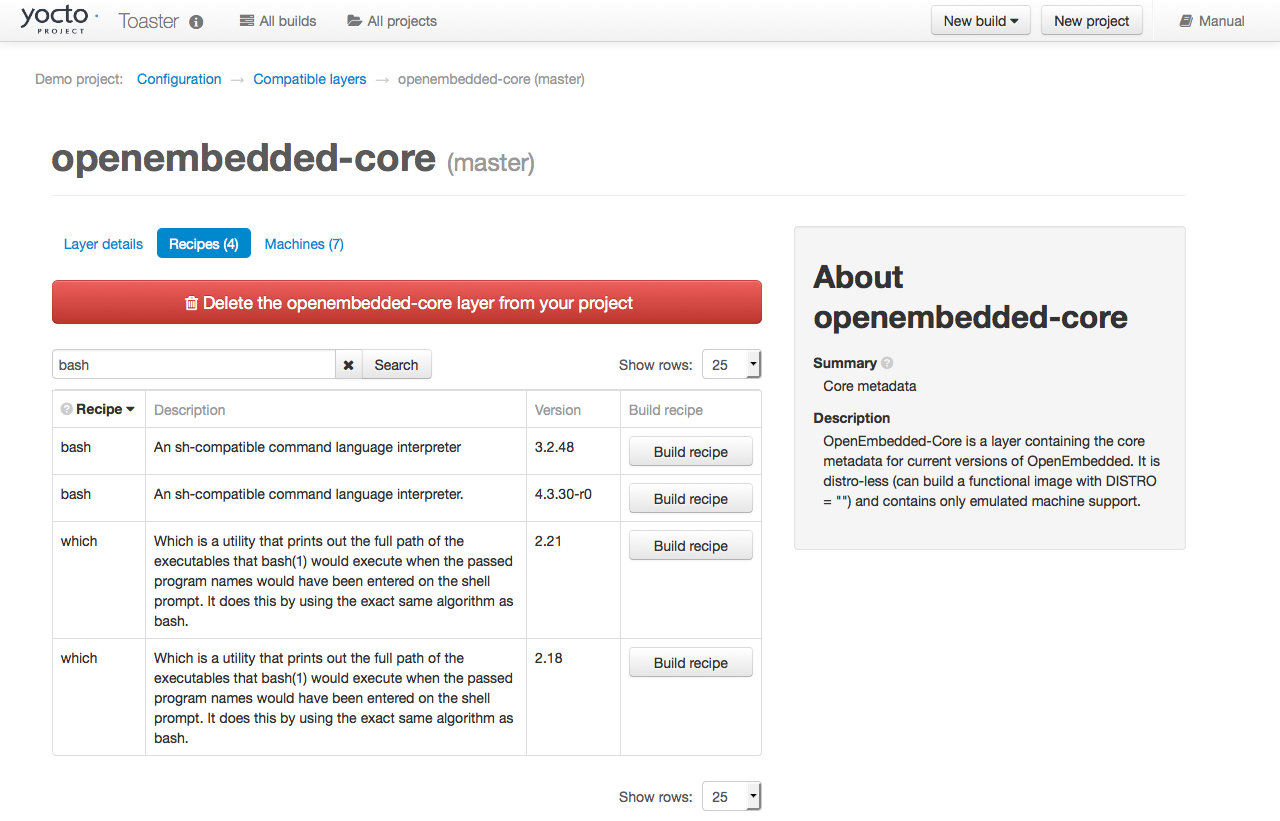

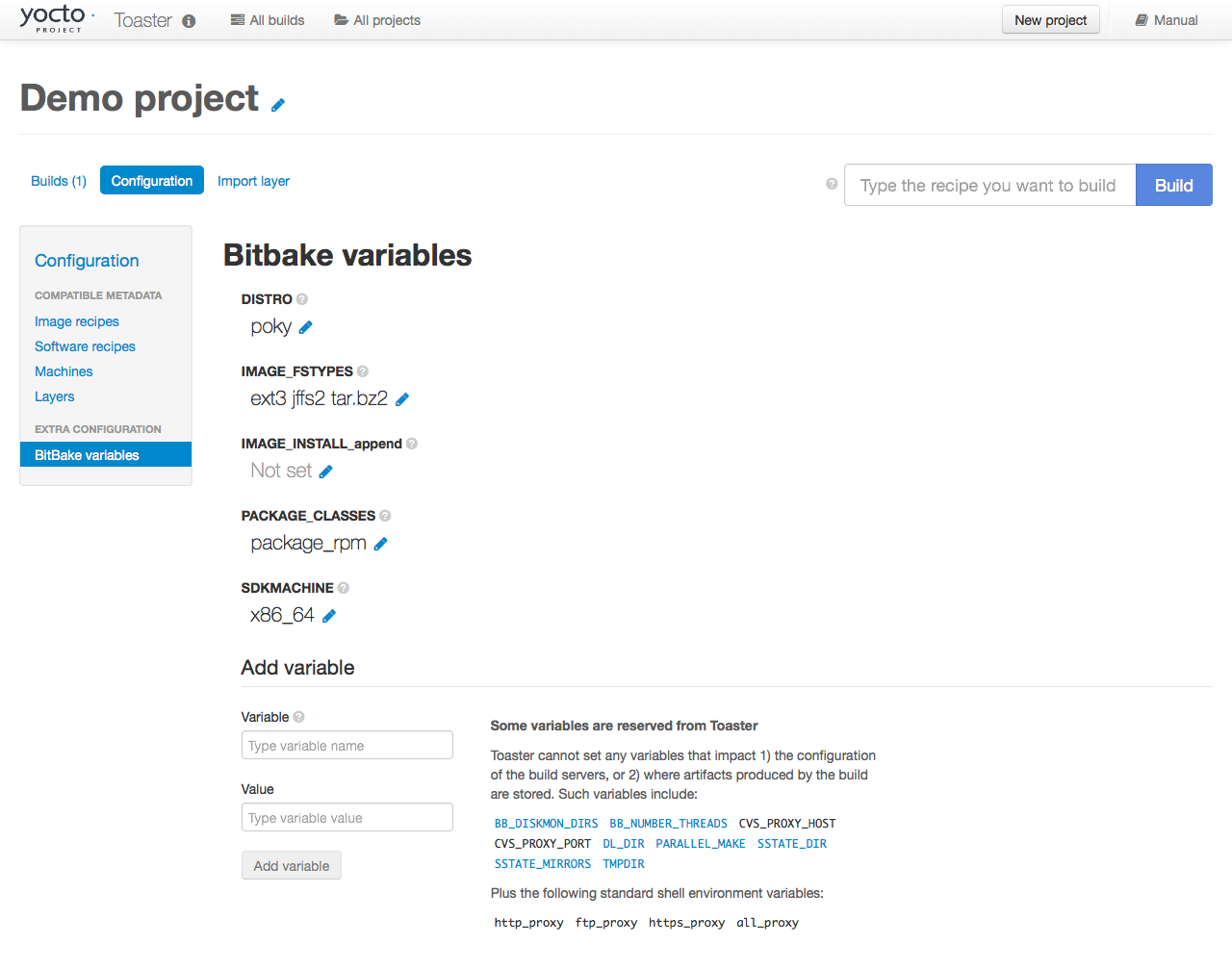

Toaster: Toaster is a web interface to the Yocto Project OpenEmbedded build system. Toaster allows you to configure, run, and view information about builds. For information on Toaster, see the Toaster User Manual.

VSCode IDE Extension: The Yocto Project BitBake extension for Visual Studio Code provides a rich set of features for working with BitBake recipes. The extension provides syntax highlighting, hover tips, and completion for BitBake files as well as embedded Python and Bash languages. Additional views and commands allow you to efficiently browse, build and edit recipes. It also provides SDK integration for cross-compiling and debugging through devtool.

Learn more about the VSCode Extension on the extension’s frontpage.

2.3.2 Production Tools

Here are tools that help with production related activities using the Yocto Project:

Auto Upgrade Helper: This utility when used in conjunction with the OpenEmbedded Build System (BitBake and OE-Core) automatically generates upgrades for recipes that are based on new versions of the recipes published upstream. See Using the Auto Upgrade Helper (AUH) for how to set it up.

Recipe Reporting System: The Recipe Reporting System tracks recipe versions available for Yocto Project. The main purpose of the system is to help you manage the recipes you maintain and to offer a dynamic overview of the project. The Recipe Reporting System is built on top of the OpenEmbedded Layer Index, which is a website that indexes OpenEmbedded-Core layers.

Patchwork: Patchwork is a fork of a project originally started by OzLabs. The project is a web-based tracking system designed to streamline the process of bringing contributions into a project. The Yocto Project uses Patchwork as an organizational tool to handle patches, which number in the thousands for every release.

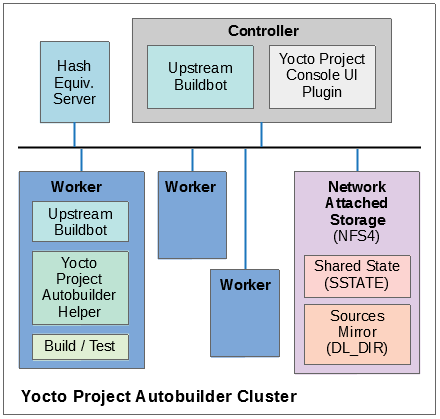

AutoBuilder: AutoBuilder is a project that automates build tests and quality assurance (QA). By using the public AutoBuilder, anyone can determine the status of the current development branch of Poky.

Note

AutoBuilder is based on buildbot.

A goal of the Yocto Project is to lead the open source industry with a project that automates testing and QA procedures. In doing so, the project encourages a development community that publishes QA and test plans, publicly demonstrates QA and test plans, and encourages development of tools that automate test and QA procedures for the benefit of the development community.

You can learn more about the AutoBuilder used by the Yocto Project in the Yocto Project Autobuilder documentation.

Pseudo: Pseudo is the Yocto Project implementation of fakeroot, which is used to run commands in an environment that seemingly has root privileges.

During a build, it can be necessary to perform operations that require system administrator privileges. For example, file ownership or permissions might need to be defined. Pseudo is a tool that you can either use directly or through the environment variable LD_PRELOAD. Either method allows these operations to succeed even without system administrator privileges.

Thanks to Pseudo, the Yocto Project never needs root privileges to build images for your target system.

You can read more about Pseudo in the “Fakeroot and Pseudo” section.
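The core idea behind Pseudo can be modeled in a few lines: instead of changing ownership on the real filesystem, which requires root privileges, record the requested ownership in a database and report it back whenever the file is inspected. The Python sketch below is a hypothetical illustration of that idea only; the class name FakeOwnershipDB is invented. The real Pseudo intercepts C library calls through LD_PRELOAD and maintains its own database, neither of which this sketch attempts.

```python
import os
import stat
import tempfile


class FakeOwnershipDB:
    """Hypothetical model: remember chown() requests instead of applying them."""

    def __init__(self) -> None:
        self._owners: dict[str, tuple[int, int]] = {}

    def chown(self, path: str, uid: int, gid: int) -> None:
        # Record the request; the real file's ownership is never changed.
        self._owners[os.path.abspath(path)] = (uid, gid)

    def stat(self, path: str) -> dict:
        # Overlay any recorded ownership on top of the real file metadata.
        real = os.stat(path)
        uid, gid = self._owners.get(os.path.abspath(path), (real.st_uid, real.st_gid))
        return {"uid": uid, "gid": gid, "mode": stat.filemode(real.st_mode)}


if __name__ == "__main__":
    db = FakeOwnershipDB()
    with tempfile.NamedTemporaryFile() as f:
        db.chown(f.name, 0, 0)  # pretend to make root the owner
        print("recorded:", db.stat(f.name))
        print("on disk uid:", os.stat(f.name).st_uid)
```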

2.3.3 OpenEmbedded Build System Components

Here are components associated with the OpenEmbedded Build System:

BitBake: BitBake is a core component of the Yocto Project and is used by the OpenEmbedded build system to build images. While BitBake is key to the build system, BitBake is maintained separately from the Yocto Project.

BitBake is a generic task execution engine that allows shell and Python tasks to be run efficiently and in parallel while working within complex inter-task dependency constraints. In short, BitBake is a build engine that works through recipes written in a specific format in order to perform sets of tasks.

You can learn more about BitBake in the BitBake User Manual.

OpenEmbedded-Core: OpenEmbedded-Core (OE-Core) is a common layer of metadata (i.e. recipes, classes, and associated files) used by OpenEmbedded-derived systems, which include the Yocto Project. The Yocto Project and the OpenEmbedded Project both maintain the OpenEmbedded-Core. You can find the OE-Core metadata in the Yocto Project Source Repositories.

Historically, the Yocto Project integrated the OE-Core metadata throughout the Yocto Project source repository reference system (Poky). After Yocto Project Version 1.0, the Yocto Project and OpenEmbedded agreed to work together and share a common core set of metadata (OE-Core), which contained much of the functionality previously found in Poky. This collaboration achieved a long-standing OpenEmbedded objective for having a more tightly controlled and quality-assured core. The results also fit well with the Yocto Project objective of achieving a smaller number of fully featured tools as compared to many different ones.

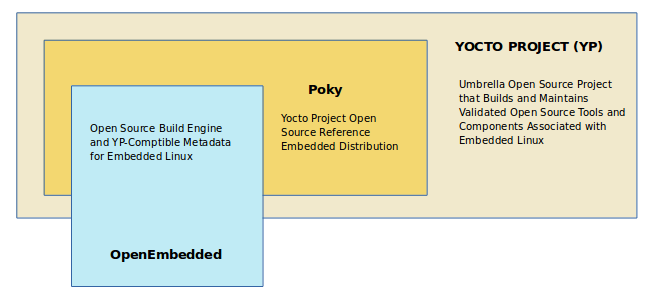

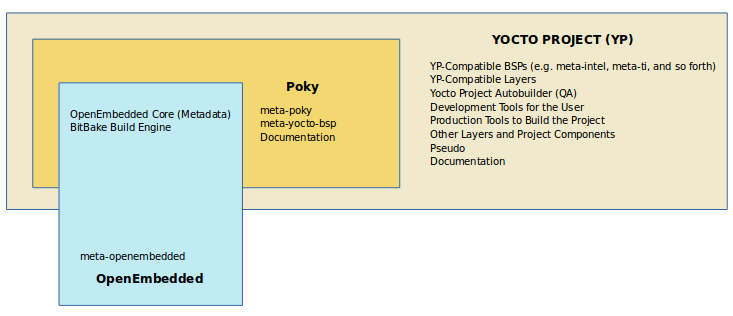

Sharing a core set of metadata results in Poky as an integration layer on top of OE-Core. You can see that in this figure. The Yocto Project combines various components such as BitBake, OE-Core, script “glue”, and documentation for its build system.
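The BitBake entry above describes a task execution engine that runs tasks in parallel within inter-task dependency constraints. To make that concrete, the following Python sketch uses the standard library's graphlib.TopologicalSorter and a thread pool: a task starts only after every task it depends on has finished, and independent tasks can run at the same time. This illustrates the general technique, not BitBake's scheduler; the recipe names libfoo and app and the shortened task chains are hypothetical, although do_fetch, do_compile, and do_package are real BitBake task names.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter  # Python 3.9+

# Each task maps to the tasks that must finish before it can start.
TASKS = {
    "libfoo:do_fetch": [],
    "libfoo:do_compile": ["libfoo:do_fetch"],
    "app:do_fetch": [],
    "app:do_compile": ["app:do_fetch", "libfoo:do_compile"],
    "app:do_package": ["app:do_compile"],
}


def run_tasks(graph, action, max_workers=4):
    """Run action(task) for every task, in parallel, respecting dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}
        while sorter.is_active():
            # Submit every task whose dependencies have all completed.
            for task in sorter.get_ready():
                running[pool.submit(action, task)] = task
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raise an exception from a failed task
                finished.append(task)
                sorter.done(task)
    return finished


if __name__ == "__main__":
    for task in run_tasks(TASKS, lambda t: print("running", t)):
        print("finished", task)
```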

2.3.4 Reference Distribution (Poky)

Poky is the Yocto Project reference distribution. It contains the OpenEmbedded Build System (BitBake and OE-Core) as well as a set of metadata to get you started building your own distribution. See the figure in “What is the Yocto Project?” section for an illustration that shows Poky and its relationship with other parts of the Yocto Project.

To use the Yocto Project tools and components, you can download (clone) Poky and use it to bootstrap your own distribution.

Note

Poky does not contain binary files. It is a working example of how to build your own custom Linux distribution from source.

You can read more about Poky in the “Reference Embedded Distribution (Poky)” section.

2.3.5 Packages for Finished Targets

Here are components associated with packages for finished targets:

Matchbox: Matchbox is an Open Source, base environment for the X Window System running on non-desktop, embedded platforms such as handhelds, set-top boxes, kiosks, and anything else for which screen space, input mechanisms, or system resources are limited.

Matchbox consists of a number of interchangeable and optional applications that you can tailor to a specific, non-desktop platform to enhance usability in constrained environments.

You can find the Matchbox source in the Yocto Project Source Repositories.

Opkg: Open PacKaGe management (opkg) is a lightweight package management system based on the itsy package (ipkg) management system. Opkg is written in C and resembles Advanced Package Tool (APT) and Debian Package (dpkg) in operation.

Opkg is intended for use on embedded Linux devices and is used in this capacity in the OpenEmbedded and OpenWrt projects, as well as the Yocto Project.

Note

As best it can, opkg maintains backwards compatibility with ipkg and conforms to a subset of Debian’s policy manual regarding control files.

You can find the opkg source in the Yocto Project Source Repositories.

2.3.6 Archived Components

The Build Appliance is a virtual machine image that enables you to build and boot a custom embedded Linux image with the Yocto Project using a non-Linux development system.

Historically, the Build Appliance was the second of three methods by which you could use the Yocto Project on a system that was not native to Linux.

Hob: Hob, which is deprecated and has not been available since the 2.1 release of the Yocto Project, provided a rudimentary, GUI-based interface to the Yocto Project. Toaster has fully replaced Hob.

Build Appliance: Post Hob, the Build Appliance became available. It was never recommended that you use the Build Appliance as a day-to-day production development environment with the Yocto Project. Build Appliance was useful as a way to try out development in the Yocto Project environment.

CROPS: The final and best solution available now for developing using the Yocto Project on a system not native to Linux is with CROPS.

2.4 Development Methods

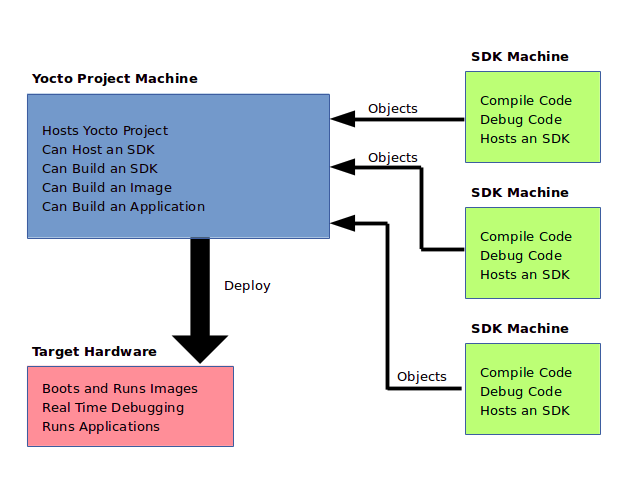

The Yocto Project development environment usually involves a Build Host and target hardware. You use the Build Host to build images and develop applications, while you use the target hardware to execute deployed software.

This section provides an introduction to the choices or development methods you have when setting up your Build Host. Depending on your particular workflow preference and the type of operating system your Build Host runs, you have several choices.

Note

For additional detail about the Yocto Project development environment, see the “The Yocto Project Development Environment” chapter.

Native Linux Host: By far the best option for a Build Host. A system running Linux as its native operating system allows you to develop software by directly using the BitBake tool. You can accomplish all aspects of development from a regular shell in a supported Linux distribution.

For information on how to set up a Build Host on a system running Linux as its native operating system, see the “Setting Up a Native Linux Host” section in the Yocto Project Development Tasks Manual.

CROss PlatformS (CROPS): Typically, you use CROPS, which leverages Docker Containers, to set up a Build Host that is not running Linux (e.g. Microsoft Windows or macOS).

Note

You can, however, use CROPS on a Linux-based system.

CROPS is an open source, cross-platform development framework that provides an easily managed, extensible environment for building binaries targeted for a variety of architectures on Windows, macOS, or Linux hosts. Once the Build Host is set up using CROPS, you can prepare a shell environment to mimic that of a shell being used on a system natively running Linux.

For information on how to set up a Build Host with CROPS, see the “Setting Up to Use CROss PlatformS (CROPS)” section in the Yocto Project Development Tasks Manual.

Windows Subsystem For Linux (WSL 2): You may use Windows Subsystem For Linux version 2 to set up a Build Host using Windows 10 or later, or Windows Server 2019 or later.

The Windows Subsystem For Linux allows Windows to run a real Linux kernel inside of a lightweight virtual machine (VM).

For information on how to set up a Build Host with WSL 2, see the “Setting Up to Use Windows Subsystem For Linux (WSL 2)” section in the Yocto Project Development Tasks Manual.

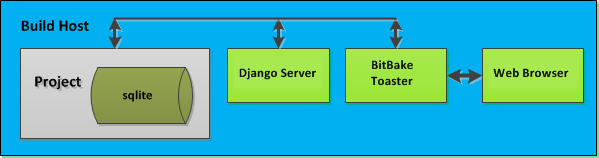

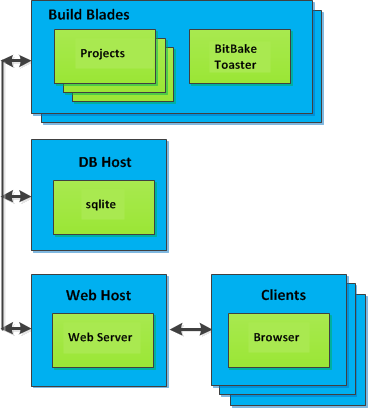

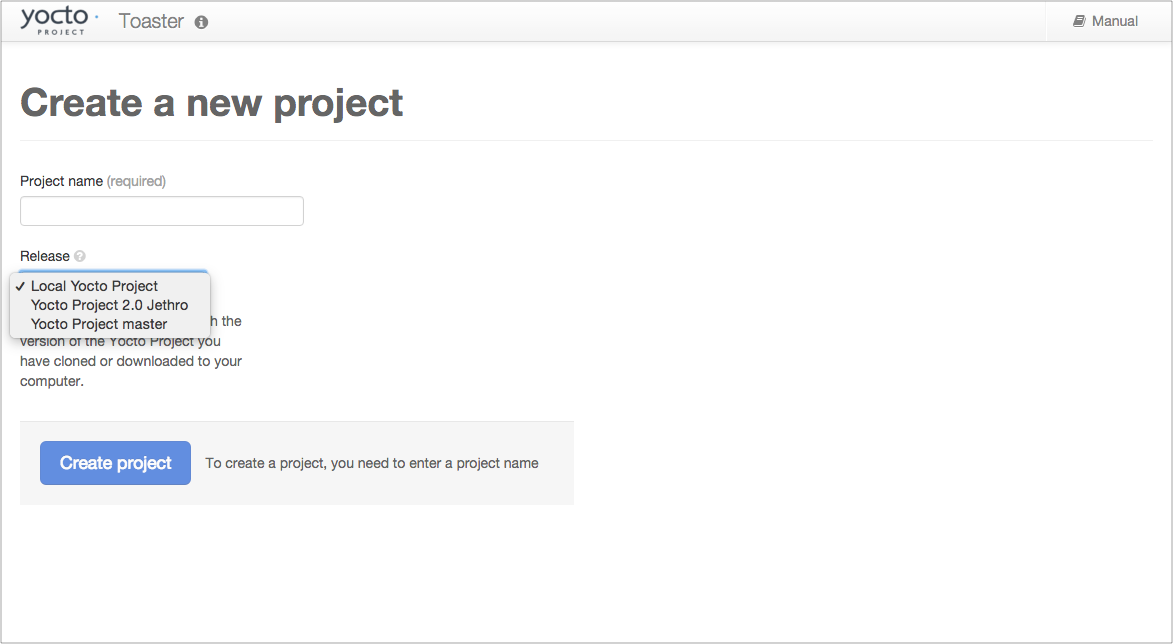

Toaster: Regardless of what your Build Host is running, you can use Toaster to develop software using the Yocto Project. Toaster is a web interface to the Yocto Project’s OpenEmbedded Build System. The interface allows you to configure and run your builds. Information about builds is collected and stored in a database. You can use Toaster to configure and start builds on multiple remote build servers.

For information about Toaster and how to use it, see the Toaster User Manual.

Using the VSCode Extension: You can use the Yocto Project BitBake extension for Visual Studio Code to start your BitBake builds through a graphical user interface.

Learn more about the VSCode Extension on the extension’s marketplace page.

2.5 Reference Embedded Distribution (Poky)

“Poky”, which is pronounced Pock-ee, is the name of the Yocto Project’s reference distribution or Reference OS Kit. Poky contains the OpenEmbedded Build System (BitBake and OpenEmbedded-Core (OE-Core)) as well as a set of Metadata to get you started building your own distro. In other words, Poky is a base specification of the functionality needed for a typical embedded system as well as the components from the Yocto Project that allow you to build a distribution into a usable binary image.

Poky is a combined repository of BitBake, OpenEmbedded-Core (which is found in meta), meta-poky, meta-yocto-bsp, and documentation provided all together and known to work well together. You can view these items that make up the Poky repository in the Source Repositories.

Note

If you are interested in all the contents of the poky Git repository, see the “Top-Level Core Components” section in the Yocto Project Reference Manual.

The following figure illustrates what generally comprises Poky:

BitBake is a task executor and scheduler that is the heart of the OpenEmbedded build system.

meta-poky, which is Poky-specific metadata.

meta-yocto-bsp, which are Yocto Project-specific Board Support Packages (BSPs).

OpenEmbedded-Core (OE-Core) metadata, which includes shared configurations, global variable definitions, shared classes, packaging, and recipes. Classes define the encapsulation and inheritance of build logic. Recipes are the logical units of software and images to be built.

Documentation, which contains the Yocto Project source files used to make the set of user manuals.

Note

While Poky is a “complete” distribution specification and is tested and put through QA, you cannot use it as a product “out of the box” in its current form.

To use the Yocto Project tools, you can use Git to clone (download) the Poky repository and then use your local copy of the reference distribution to bootstrap your own distribution.

Note

Poky does not contain binary files. It is a working example of how to build your own custom Linux distribution from source.

Poky has a regular, well established, six-month release cycle under its own version. Major releases occur at the same time major releases (point releases) occur for the Yocto Project, which are typically in the Spring and Fall. For more information on the Yocto Project release schedule and cadence, see the “Yocto Project Releases and the Stable Release Process” chapter in the Yocto Project Reference Manual.

Much has been said about Poky being a “default configuration”. A default configuration provides a starting image footprint. You can use Poky out of the box to create an image ranging from a shell-accessible minimal image all the way up to a Linux Standard Base-compliant image that uses a GNOME Mobile and Embedded (GMAE) based reference user interface called Sato.

One of the most powerful properties of Poky is that every aspect of a build is controlled by the metadata. You can use metadata to augment these base image types by adding metadata layers that extend functionality. These layers can provide, for example, an additional software stack for an image type, add a board support package (BSP) for additional hardware, or even create a new image type.

Metadata is loosely grouped into configuration files or package recipes.

A recipe is a collection of non-executable metadata used by BitBake to set variables or define additional build-time tasks. A recipe contains fields such as the recipe description, the recipe version, the license of the package and the upstream source repository. A recipe might also indicate that the build process uses autotools, make, distutils or any other build process, in which case the basic functionality can be defined by the classes it inherits from the OE-Core layer’s class definitions in ./meta/classes. Within a recipe you can also define additional tasks as well as task prerequisites. BitBake recipe syntax also supports both the :prepend and :append operators as a method of extending task functionality. These operators inject code into the beginning or end of a task. For information on these BitBake operators, see the “Appending and Prepending (Override Style Syntax)” section in the BitBake User’s Manual.
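As a rough illustration of what these operators do, the following Python sketch models a task body as a string and applies queued :prepend and :append text to it, similar to how a recipe line such as `do_install:append() { ... }` adds shell code after the existing do_install body. The `TaskBody` class and its methods are hypothetical and are not BitBake's internal API; real BitBake also handles overrides and conditional application, which this sketch omits.

```python
from dataclasses import dataclass, field


@dataclass
class TaskBody:
    """Hypothetical model of a task function plus queued :prepend/:append text.

    This is not BitBake's API; it only illustrates the concatenation semantics.
    """
    body: str
    prepends: list[str] = field(default_factory=list)
    appends: list[str] = field(default_factory=list)

    def prepend(self, text: str) -> None:
        # Like "do_task:prepend() { ... }": queued, applied when finalized.
        self.prepends.append(text)

    def append(self, text: str) -> None:
        # Like "do_task:append() { ... }": queued, applied when finalized.
        self.appends.append(text)

    def finalize(self) -> str:
        value = self.body
        # Each prepend is placed in front of the current value, so a later
        # prepend ends up ahead of an earlier one.
        for text in self.prepends:
            value = text + value
        # Each append is added after the current value. No separator is
        # inserted, so the text must carry its own newlines or spaces.
        for text in self.appends:
            value = value + text
        return value


if __name__ == "__main__":
    do_install = TaskBody("    install -m 0755 hello ${D}${bindir}\n")
    do_install.prepend("    install -d ${D}${bindir}\n")
    do_install.append("    echo 'hello installed'\n")
    print(do_install.finalize(), end="")
```

Note that the operators concatenate text without adding a space, unlike `+=`, so appended or prepended code should include its own line breaks.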

2.6 The OpenEmbedded Build System Workflow

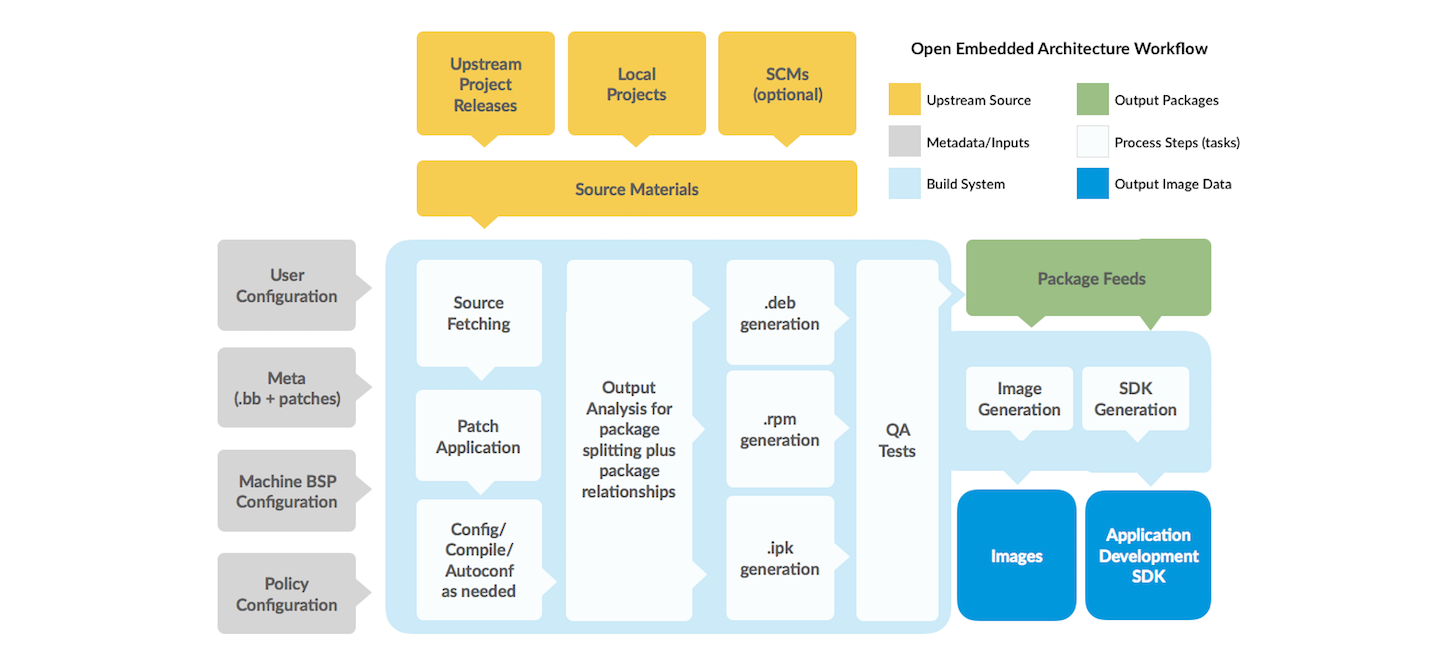

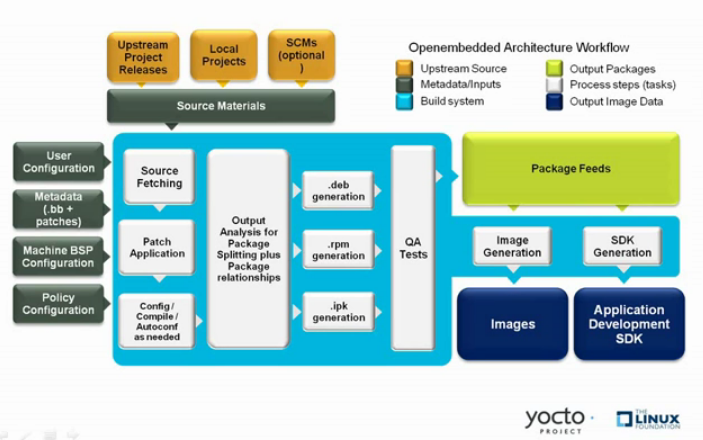

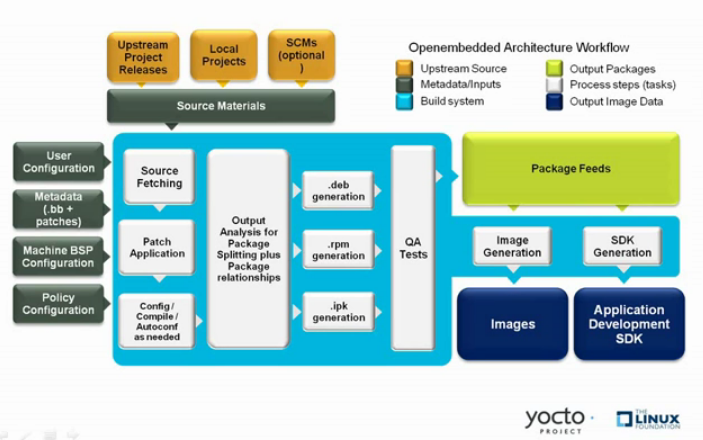

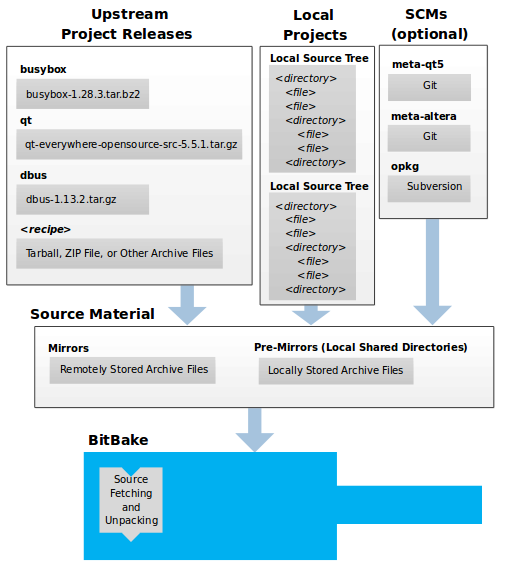

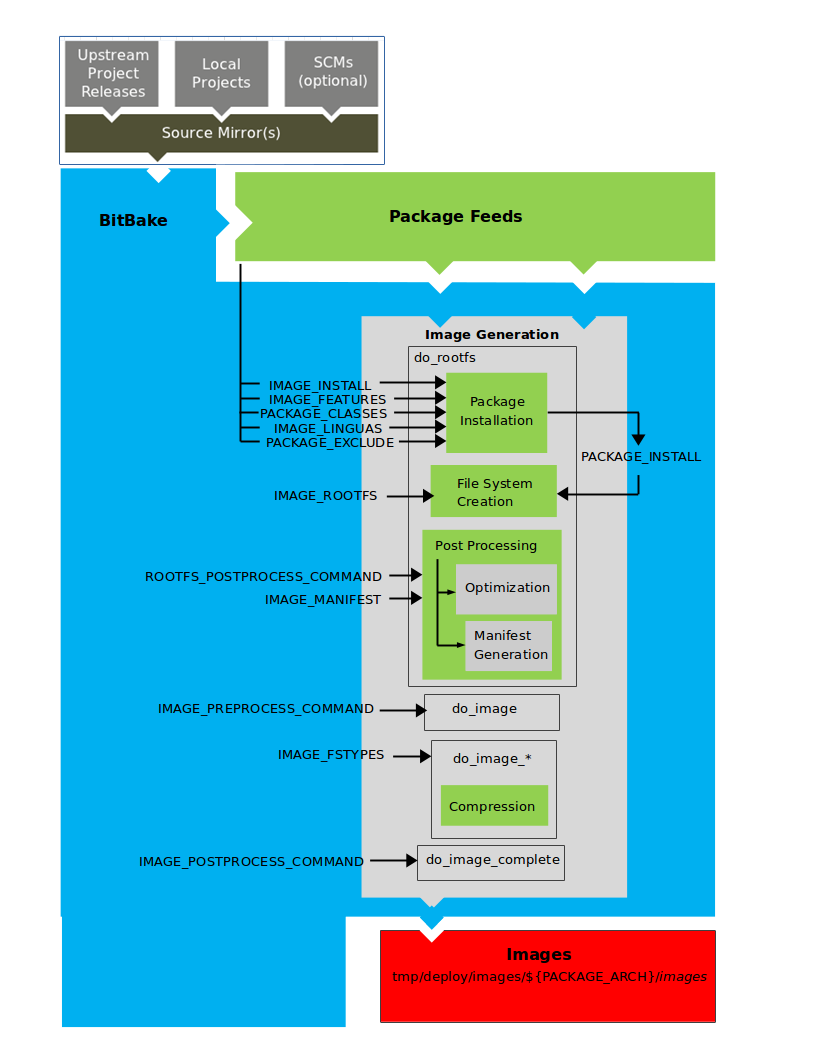

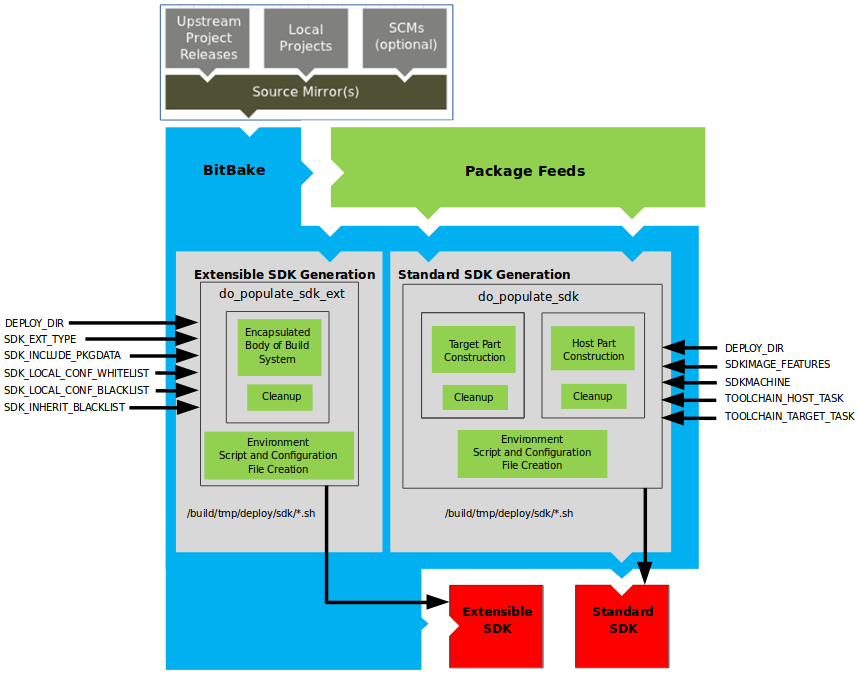

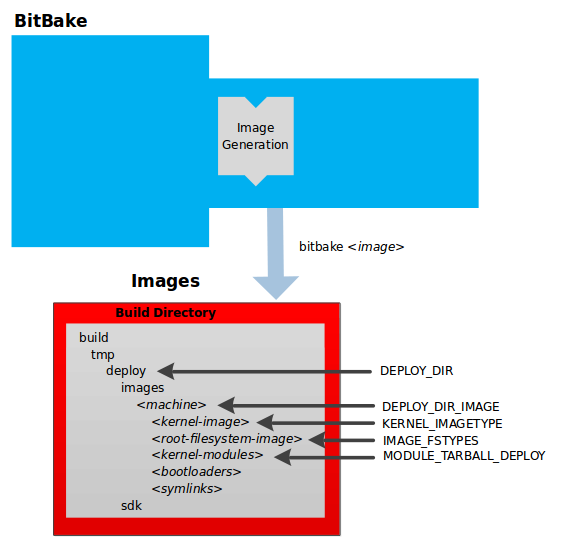

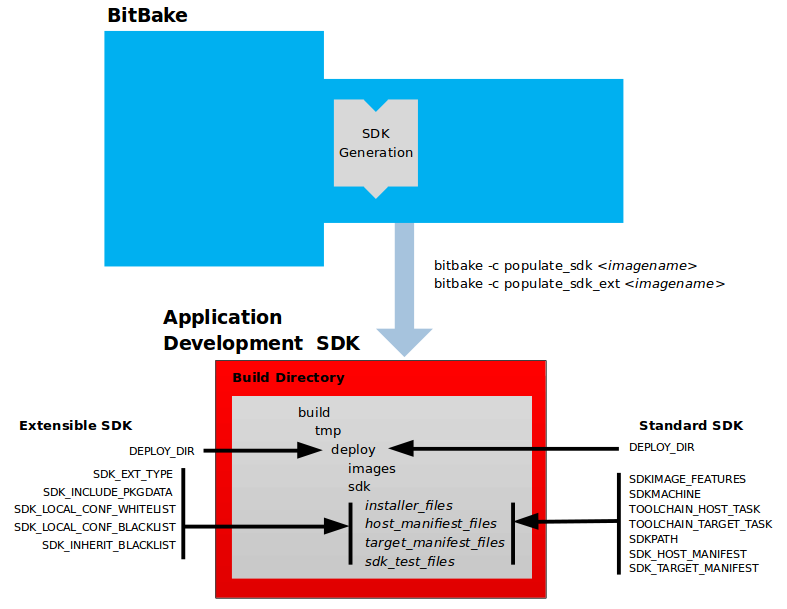

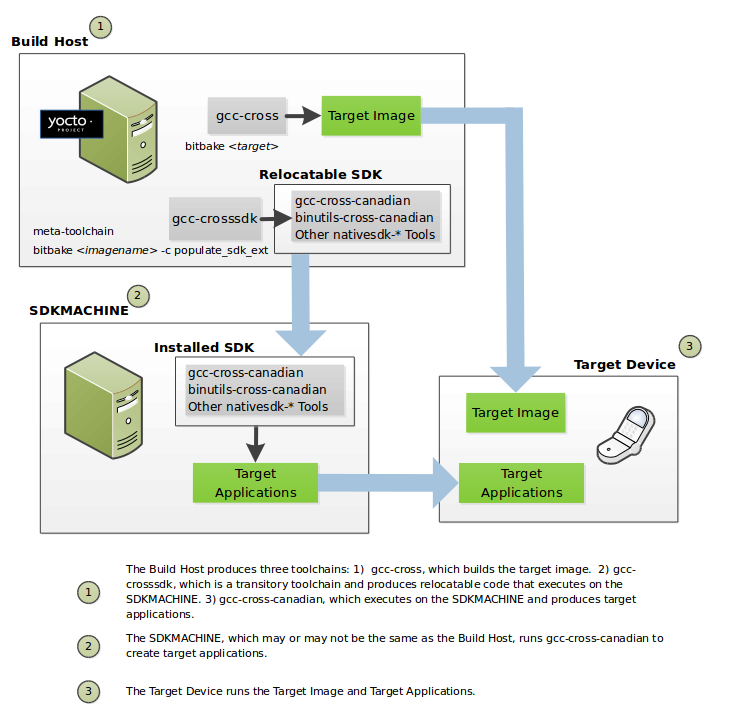

The OpenEmbedded Build System uses a “workflow” to accomplish image and SDK generation. The following figure overviews that workflow:

Here is a brief summary of the “workflow”:

Developers specify architecture, policies, patches and configuration details.

The build system fetches the source code from the specified location. The build system supports standard methods such as tarballs or source code repository systems such as Git.

Once source code is downloaded, the build system extracts the sources into a local work area where patches are applied and common steps for configuring and compiling the software are run.

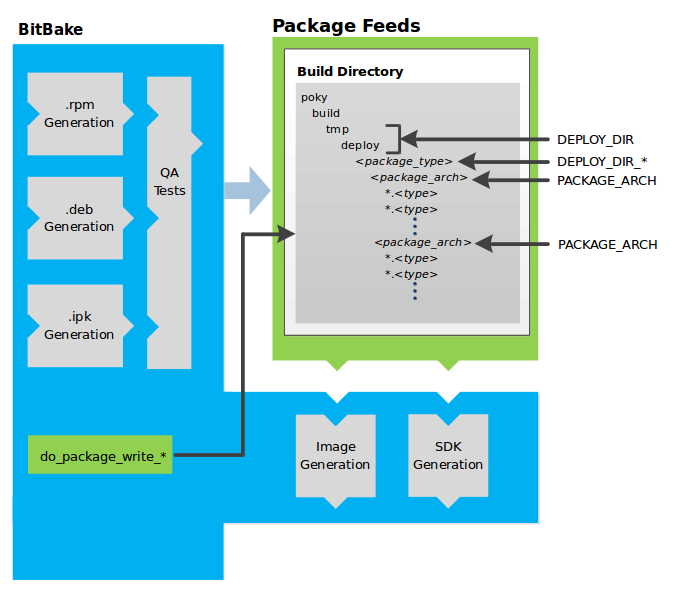

The build system then installs the software into a temporary staging area where the binary package format you select (DEB, RPM, or IPK) is used to roll up the software.

Different QA and sanity checks run throughout the entire build process.

After the binaries are created, the build system generates a binary package feed that is used to create the final root file system image.

The build system generates the file system image and a customized Extensible SDK (eSDK) for application development in parallel.
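The summary above maps roughly onto BitBake task names. The following Python sketch lists, in order, a simplified subset of the standard tasks for one recipe followed by the image-level tasks, with the package-writing task chosen by the package format (in a real build this is selected through the PACKAGE_CLASSES variable, for example `package_ipk`). The `workflow_tasks` function and the exact subset of tasks are assumptions for illustration; real builds run many more tasks, spread across many recipes, and in parallel.

```python
# Simplified subset of per-recipe tasks, in the order BitBake normally runs them.
RECIPE_TASKS = [
    "do_fetch",      # download sources (tarballs, Git, ...)
    "do_unpack",     # extract sources into the work area
    "do_patch",      # apply patches
    "do_configure",  # configure the software
    "do_compile",    # build it
    "do_install",    # install into a staging area
    "do_package",    # split installed files into packages
]

# Binary package formats mentioned in the summary.
PACKAGE_FORMATS = ("deb", "rpm", "ipk")


def workflow_tasks(package_format: str) -> list[str]:
    """Return a simplified, linear task order for turning one recipe into an image.

    Hypothetical helper: it ignores QA tasks, dependencies between recipes,
    the SDK, and parallel execution.
    """
    if package_format not in PACKAGE_FORMATS:
        raise ValueError(f"unsupported package format: {package_format!r}")
    return RECIPE_TASKS + [
        f"do_package_write_{package_format}",  # write DEB/RPM/IPK files to the package feed
        "do_rootfs",                           # image recipe: assemble the root filesystem from packages
        "do_image",                            # image recipe: generate the filesystem image
    ]


if __name__ == "__main__":
    for task in workflow_tasks("ipk"):
        print(task)
```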

For a very detailed look at this workflow, see the “OpenEmbedded Build System Concepts” section.

2.7 Some Basic Terms

It helps to understand some basic terms when learning the Yocto Project. Although there is a list of terms in the “Yocto Project Terms” section of the Yocto Project Reference Manual, this section provides the definitions of some terms helpful for getting started:

Configuration Files: Files that hold global definitions of variables, user-defined variables, and hardware configuration information. These files tell the OpenEmbedded Build System what to build and what to put into the image to support a particular platform.
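To make configuration variables concrete, here is a small Python sketch of two BitBake assignment operators that configuration files such as local.conf commonly use: `=` sets a value unconditionally, while `?=` sets it only if the variable is still undefined at that point in parsing. The `apply_assignments` function is hypothetical and ignores everything else BitBake does (variable expansion, overrides, `??=`, `+=`, and so on); the MACHINE and DISTRO values are only examples.

```python
def apply_assignments(assignments: list[tuple[str, str, str]]) -> dict[str, str]:
    """Apply (name, operator, value) assignments in parse order.

    Hypothetical sketch covering only the "=" and "?=" operators.
    """
    variables: dict[str, str] = {}
    for name, op, value in assignments:
        if op == "=":
            # Hard assignment: always replaces any earlier value.
            variables[name] = value
        elif op == "?=":
            # Default assignment: only takes effect if nothing is set yet.
            variables.setdefault(name, value)
        else:
            raise ValueError(f"operator not modeled in this sketch: {op!r}")
    return variables


if __name__ == "__main__":
    parsed = apply_assignments([
        ("MACHINE", "?=", "qemux86-64"),  # a default, as a sample local.conf might set
        ("MACHINE", "?=", "qemuarm"),     # ignored: MACHINE is already set
        ("DISTRO", "=", "poky"),
    ])
    print(parsed)  # {'MACHINE': 'qemux86-64', 'DISTRO': 'poky'}
```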

Extensible Software Development Kit (eSDK): A custom SDK for application developers. This eSDK allows developers to incorporate their library and programming changes back into the image to make their code available to other application developers. For information on the eSDK, see the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual.

Layer: A collection of related recipes. Layers allow you to consolidate related metadata to customize your build. Layers also isolate information used when building for multiple architectures. Layers are hierarchical in their ability to override previous specifications. You can include any number of available layers from the Yocto Project and customize the build by adding your own layers after them. You can search the Layer Index for layers used within Yocto Project.

For more detailed information on layers, see the “Understanding and Creating Layers” section in the Yocto Project Development Tasks Manual. For a discussion specifically on BSP Layers, see the “BSP Layers” section in the Yocto Project Board Support Packages (BSP) Developer’s Guide.
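The override behavior of layers can be sketched as follows. Each layer declares a priority (in a real layer, the BBFILE_PRIORITY value set in its conf/layer.conf), and when the same recipe appears in several layers, the copy from the higher-priority layer is used regardless of which copy has the newer version. The Python below is a simplified model under that assumption; the layer `meta-mylayer`, the priorities, and the versions are hypothetical, and the tie-breaking rule (first-listed layer wins) is a simplification of this sketch only.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical model of a layer: a name, a priority, and the recipes it provides."""
    name: str
    priority: int            # stands in for the layer's BBFILE_PRIORITY
    recipes: dict[str, str]  # recipe name (PN) -> version (PV)


def select_recipes(layers: list[Layer]) -> dict[str, tuple[str, str]]:
    """For each recipe name, pick (layer name, version) from the highest-priority layer.

    On equal priorities, the layer listed first wins (a simplification).
    """
    chosen: dict[str, tuple[int, str, str]] = {}
    for layer in layers:
        for pn, pv in layer.recipes.items():
            current = chosen.get(pn)
            # Priority decides, even if the lower-priority copy has a newer version.
            if current is None or layer.priority > current[0]:
                chosen[pn] = (layer.priority, layer.name, pv)
    return {pn: (name, pv) for pn, (_, name, pv) in chosen.items()}


if __name__ == "__main__":
    core = Layer("meta", 5, {"busybox": "1.36.1", "base-files": "3.0.14"})
    custom = Layer("meta-mylayer", 10, {"busybox": "1.35.0"})  # hypothetical layer
    for pn, (layer, pv) in sorted(select_recipes([core, custom]).items()):
        print(f"{pn}: {pv} from {layer}")
```

Here busybox 1.35.0 from the hypothetical meta-mylayer is selected over the newer 1.36.1 in meta, because the custom layer has the higher priority.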

Metadata: A key element of the Yocto Project is the Metadata that is used to construct a Linux distribution and is contained in the files that the OpenEmbedded build system parses when building an image. In general, Metadata includes recipes, configuration files, and other information that refers to the build instructions themselves, as well as the data used to control what things get built and the effects of the build. Metadata also includes commands and data used to indicate what versions of software are used, from where they are obtained, and changes or additions to the software itself (patches or auxiliary files) that are used to fix bugs or customize the software for use in a particular situation. OpenEmbedded-Core is an important set of validated metadata.

OpenEmbedded Build System: The terms “BitBake” and “build system” are sometimes used for the OpenEmbedded Build System.

BitBake is a task scheduler and execution engine that parses instructions (i.e. recipes) and configuration data. After a parsing phase, BitBake creates a dependency tree to order the compilation, schedules the compilation of the included code, and finally executes the building of the specified custom Linux image (distribution). BitBake is similar to the make tool. During a build process, the build system tracks dependencies and performs a native or cross-compilation of each package. As a first step in a cross-build setup, the framework attempts to create a cross-compiler toolchain (i.e. Extensible SDK) suited for the target platform.
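The dependency-ordering step can be illustrated with Python's standard `graphlib` module (Python 3.9 or later). The sketch below treats each recipe as a single node and groups nodes into batches whose dependencies are all complete, which is roughly how a scheduler can run independent work in parallel. This is a simplification: BitBake actually schedules individual tasks (for example `busybox:do_compile`) rather than whole recipes, and the dependency graph shown here is made up for illustration.

```python
from graphlib import TopologicalSorter


def build_batches(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group nodes into batches; every node's dependencies are in earlier batches.

    `deps` maps each node to the set of nodes it depends on.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # nodes whose dependencies are all done
        batches.append(ready)
        sorter.done(*ready)                 # mark them finished, unlocking dependents
    return batches


if __name__ == "__main__":
    # Illustrative dependency graph, not the real one for this image.
    deps = {
        "core-image-minimal": {"busybox", "base-files"},
        "busybox": {"glibc"},
        "glibc": {"gcc-cross"},
        "base-files": set(),
        "gcc-cross": set(),
    }
    for i, batch in enumerate(build_batches(deps), start=1):
        print(i, batch)
```

Nodes in the same batch, such as base-files and gcc-cross, have no ordering constraint between them, so a scheduler is free to build them concurrently; in BitBake, the BB_NUMBER_THREADS variable limits how many tasks run at once.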

OpenEmbedded-Core (OE-Core): OE-Core is metadata comprised of foundation recipes, classes, and associated files that are meant to be common among many different OpenEmbedded-derived systems, including the Yocto Project. OE-Core is a curated subset of an original repository developed by the OpenEmbedded community that has been pared down into a smaller, core set of continuously validated recipes. The result is a tightly controlled and quality-assured core set of recipes.

You can see the Metadata in the meta directory of the Yocto Project Source Repositories.

Packages: In the context of the Yocto Project, this term refers to a recipe’s packaged output produced by BitBake (i.e. a “baked recipe”). A package is generally the compiled binaries produced from the recipe’s sources. You “bake” something by running it through BitBake.

It is worth noting that the term “package” can, in general, have subtle meanings. For example, the packages referred to in the “Required Packages for the Build Host” section in the Yocto Project Reference Manual are compiled binaries that, when installed, add functionality to your host Linux distribution.

Another point worth noting is that historically within the Yocto Project, recipes were referred to as packages; hence the existence of several BitBake variables that are seemingly misnamed (e.g. PR, PV, and PE).
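These variables are easiest to understand by seeing where their values come from. By default, BitBake derives PN and PV from the recipe's file name, which follows the pattern `<PN>_<PV>.bb`; PV falls back to "1.0" when the file name carries no version, PR defaults to "r0", and PE (the epoch) is normally unset. The Python sketch below reproduces only those defaults. The helper names are hypothetical, the sketch does not handle every file-naming case BitBake accepts, and the combined `PE:PV-PR` string (epoch omitted when empty or zero) is shown as the typical package-version convention.

```python
import os


def recipe_name_and_version(path: str) -> tuple[str, str]:
    """Derive default PN and PV from a recipe file name like 'busybox_1.36.1.bb'.

    Hypothetical helper: mirrors only the simple <PN>_<PV>.bb convention.
    """
    base = os.path.basename(path)
    if not base.endswith(".bb"):
        raise ValueError(f"not a recipe file: {base!r}")
    parts = base[: -len(".bb")].split("_")
    if len(parts) > 2:
        raise ValueError(f"this sketch only handles <PN>_<PV>.bb names: {base!r}")
    pn = parts[0]
    pv = parts[1] if len(parts) == 2 else "1.0"  # PV defaults to "1.0"
    return pn, pv


def full_version(pv: str, pr: str = "r0", pe: str = "") -> str:
    """Combine epoch, version, and revision as [PE:]PV-PR; the epoch is dropped if empty or 0."""
    epoch = f"{pe}:" if pe and int(pe) > 0 else ""
    return f"{epoch}{pv}-{pr}"


if __name__ == "__main__":
    # Example path; the version number is illustrative.
    pn, pv = recipe_name_and_version("meta/recipes-core/busybox/busybox_1.36.1.bb")
    print(pn, full_version(pv))            # busybox 1.36.1-r0
    print(full_version("2.0", "r3", "1"))  # 1:2.0-r3
```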

Poky: Poky is a reference embedded distribution and a reference test configuration. Poky provides the following:

A base-level functional distro used to illustrate how to customize a distribution.

A means by which to test the Yocto Project components (i.e. Poky is used to validate the Yocto Project).

A vehicle through which you can download the Yocto Project.

Poky is not a product-level distro. Rather, it is a good starting point for customization.

Note

Poky is an integration layer on top of OE-Core.

Recipe: The most common form of metadata. A recipe contains a list of settings and tasks (i.e. instructions) for building packages that are then used to build the binary image. A recipe describes where you get source code and which patches to apply. Recipes describe dependencies for libraries or for other recipes as well as configuration and compilation options. Related recipes are consolidated into a layer.

3 The Yocto Project Development Environment

This chapter takes a look at the Yocto Project development environment. The chapter provides Yocto Project development environment concepts that help you understand how work is accomplished in an open source environment, which differs greatly from work accomplished in a closed, proprietary environment.

Specifically, this chapter addresses open source philosophy, source repositories, workflows, Git, and licensing.

3.1 Open Source Philosophy

Open source philosophy is characterized by software development directed by peer production and collaboration through an active community of developers. Contrast this to the more standard centralized development models used by commercial software companies where a finite set of developers produces a product for sale using a defined set of procedures that ultimately result in an end product whose architecture and source material are closed to the public.

Open source projects conceptually have differing concurrent agendas, approaches, and production. These facets of the development process can come from anyone in the public (community) who has a stake in the software project. The open source environment contains new copyright, licensing, domain, and consumer issues that differ from the more traditional development environment. In an open source environment, the end product, source material, and documentation are all available to the public at no cost.

A benchmark example of an open source project is the Linux kernel, which was initially conceived and created by Finnish computer science student Linus Torvalds in 1991. Conversely, a good example of a non-open source project is the Windows family of operating systems developed by Microsoft Corporation.

Wikipedia has a good historical description of the Open Source Philosophy. You can also find helpful information on how to participate in the Linux Community here.

3.2 The Development Host

A development host or Build Host is key to using the Yocto Project. Because the goal of the Yocto Project is to develop images or applications that run on embedded hardware, development of those images and applications generally takes place on a system not intended to run the software — the development host.

You need to set up a development host in order to use it with the Yocto Project. Most find that it is best to have a native Linux machine function as the development host. However, it is possible to use a system that does not run Linux as its operating system as your development host. When you have a Mac or Windows-based system, you can set it up as the development host by using CROPS, which leverages Docker Containers. Once you take the steps to set up a CROPS machine, you effectively have access to a shell environment that is similar to what you see when using a Linux-based development host. For the steps needed to set up a system using CROPS, see the “Setting Up to Use CROss PlatformS (CROPS)” section in the Yocto Project Development Tasks Manual.

If your development host is going to be a system that runs a Linux distribution, you must still take steps to prepare the system for use with the Yocto Project. You need to be sure that the Linux distribution on the system is one that supports the Yocto Project. You also need to be sure that the correct set of host packages is installed to allow development using the Yocto Project. For the steps needed to set up a development host that runs Linux, see the “Setting Up a Native Linux Host” section in the Yocto Project Development Tasks Manual.

Once your development host is set up to use the Yocto Project, there are several ways of working in the Yocto Project environment:

Command Lines, BitBake, and Shells: Traditional development in the Yocto Project involves using the OpenEmbedded Build System, which uses BitBake, in a command-line environment from a shell on your development host. You can accomplish this from a host that is a native Linux machine or from a host that has been set up with CROPS. Either way, you create, modify, and build images and applications all within a shell-based environment using components and tools available through your Linux distribution and the Yocto Project.